FP8 in Depth: An Efficient, Low-Power Choice for the AI Computing Era, and How Developers Can Avoid Its Core Performance Pitfalls

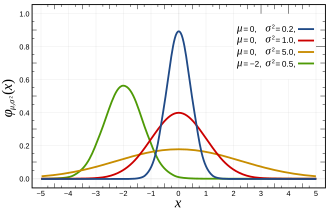

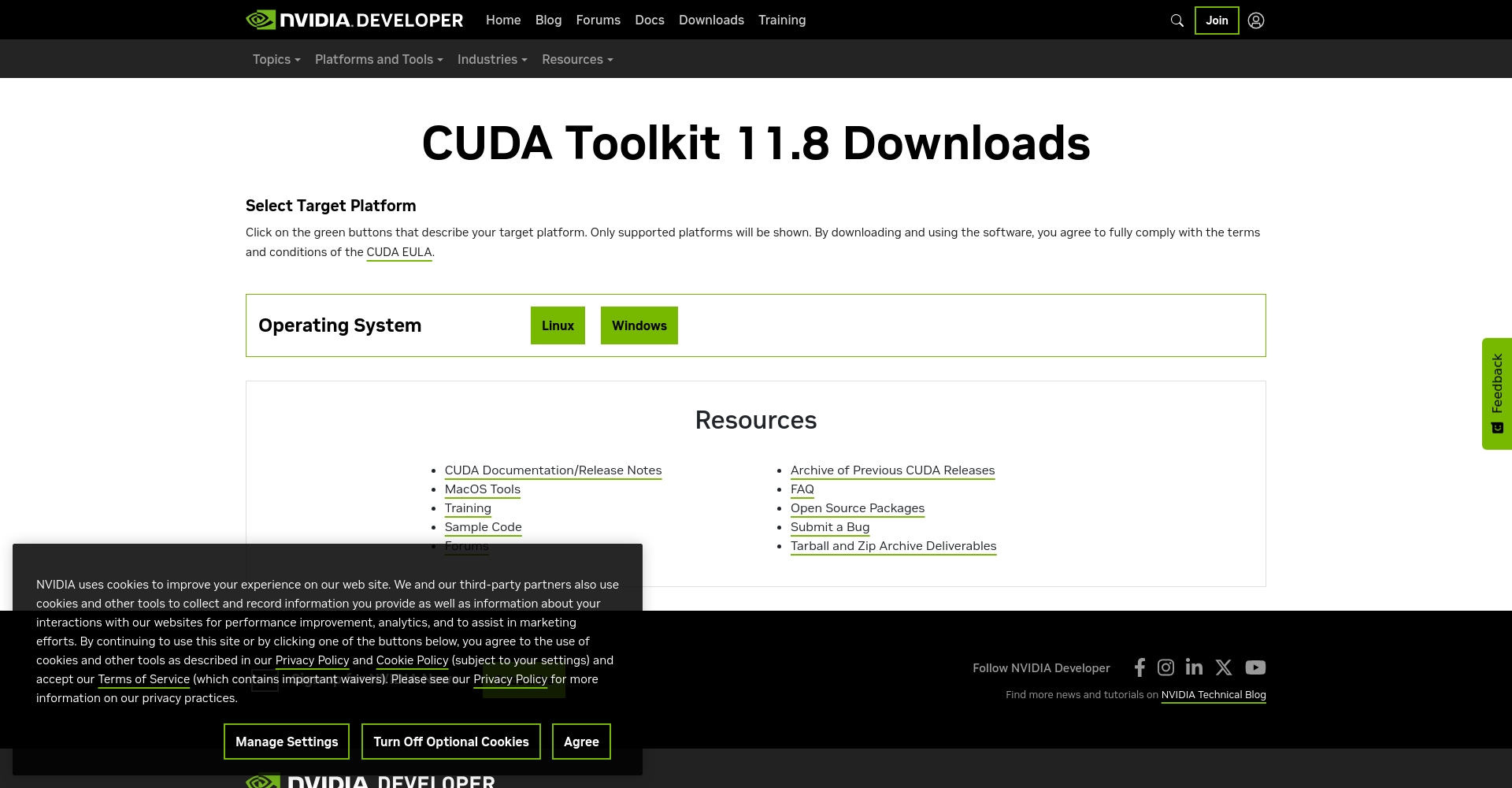

The low-precision FP8 (8-bit floating-point) format has emerged as a leading option for AI workloads that need high compute throughput at low energy cost, and chips from NVIDIA, AMD, and other manufacturers are gradually adding native support for it. This article analyzes the principles, advantages, and risks of FP8 in detail and compares it with mainstream formats such as BF16, FP16, FP32, and INT4...