AI Model Quantization and Acceleration: 5 Practical Techniques Explained to Help You Save Computing Power Efficiently

From a news-reporting perspective, this article covers five key techniques that enterprises use on AI platforms for model compression and inference acceleration: quantization, pruning, knowledge distillation, lightweight architecture design, and compilers with hardware acceleration. It covers mainstream methodologies, tool selection, industry best practices, and application examples, with the aim of helping developers save computing resources, reduce model deployment costs, and broaden the real-world adoption of AI. It is intended for technical teams and AI product engineers who want to keep up with current model optimization ideas, and it provides practical development guidelines and resource recommendations.

Overview of 5 key techniques for AI model compression and acceleration

| Technique | How it works | Applicable scenarios | Representative tools/platforms | Compatible platforms | Typical effect |
|---|---|---|---|---|---|
| 1. Quantization | Converts 32/16-bit weights to lower-bit integers (8/4/2-bit), significantly reducing computation and storage. | Most NLP and CV models; LLM inference deployment | HuggingFace, ONNX, TensorRT, vLLM | AWS SageMaker, Azure ML, etc. | 2-16x compression; up to 10x faster inference |
| 2. Pruning | Removes unimportant weights and connections to simplify the structure. | Deep models with heavy redundancy | Torch Pruning, SparseGPT, TF Optimization | Mainstream cloud ML platforms | 1.5-10x compression with significant acceleration |
| 3. Knowledge distillation | Uses a large model as a "teacher" to train a small model. | Tasks that need a compressed model; deployment on small devices | DistilBERT, MiniLM, MobileNet | HuggingFace, SageMaker, etc. | 10-30% of the original size; retains 80-95% of performance |
| 4. Lightweight structural design | Efficient model architectures with heavily simplified convolutions/channels | Mobile/IoT/edge deployment | MobileNet, EfficientNet, SqueezeNet | TF Lite, PyTorch Mobile | Size reduced to 1/5 or even 1/10; low power consumption |
| 5. Compilers and hardware acceleration | Optimizes the model for efficient hardware-specific instructions. | Cloud APIs, edge AI, extreme concurrency | TensorRT, TVM, ONNX Runtime, vLLM | Cloud GPU/TPU/FPGA | Speedups from several times to more than 10x |
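
To make the compression ratios in the table concrete, here is a back-of-the-envelope sketch of weight storage at different bit widths. The 7-billion-parameter count is an illustrative assumption, not a figure from this article, and `weight_size_gb` is a hypothetical helper. It counts the weights only and ignores quantization scales, activations, and runtime overhead.

```python
def weight_size_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate storage for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 7_000_000_000  # assumed model size, for illustration only

baseline = weight_size_gb(NUM_PARAMS, 32)
for bits in (32, 16, 8, 4, 2):
    size = weight_size_gb(NUM_PARAMS, bits)
    print(f"{bits:>2}-bit: {size:6.2f} GB ({baseline / size:.0f}x smaller than FP32)")
```

Going from 32-bit to 16, 8, 4, and 2-bit weights gives the 2x to 16x range quoted for quantization. Real savings are usually somewhat lower because scales and some layers stay in higher precision.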

Quantization: The preferred solution for compression and acceleration

Technical Principles Explanation

Quantization replaces 32/16-bit floating-point weights with lower-precision integers (8/4/2 bits). It significantly reduces model size and speeds up inference, making it particularly suitable for high-concurrency and resource-constrained scenarios.

- Post-training quantization (PTQ): quantizes a model that has already been trained. Suitable for general-purpose models and quick deployment, with some loss of accuracy.

- Quantization-aware training (QAT): simulates quantization during the training phase. Suitable for applications requiring high accuracy.
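
As a rough illustration of the idea behind PTQ, the sketch below applies per-tensor symmetric quantization to a handful of toy weights. It is a minimal sketch, not how any particular toolkit implements it: the function names are hypothetical, the weights are made up, and production tools add calibration data, per-channel scales, and zero points.

```python
def quantize_symmetric(weights, num_bits=8):
    """Map floats to signed integers using one shared scale (per-tensor, symmetric)."""
    qmax = 2 ** (num_bits - 1) - 1               # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer code and clamp to the representable range.
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.05, 0.9, -0.33]        # toy values, not from a real model
codes, scale = quantize_symmetric(weights)
restored = dequantize(codes, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print("codes:", codes)
print("scale:", scale, "max round-trip error:", max_err)
```

The round-trip error is bounded by half the scale, which is why fewer bits (a larger scale) cost more accuracy. QAT exposes the model to this rounding during training so it can adapt to it.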

Mainstream tools & platforms

- HuggingFace Transformers: supports automatic quantization through BitsAndBytes and Optimum, and works with QAT/PTQ.

- ONNX Runtime: automated quantization and export, compatible with mainstream frameworks and hardware.

- TensorRT: suited to the NVIDIA ecosystem, with optimized acceleration for FP16/INT8/INT4.

- vLLM: optimized for large-model inference, with support for multiple quantization formats.

- These tools integrate with major cloud platforms such as AWS SageMaker and Google Vertex AI.

Industry Application Cases

- Smartphone voice assistants and image beautification models use INT8 quantization, which significantly extends battery life.

- Cloud inference for large models such as Meta Llama and OpenAI GPT integrates low-bit quantization to reduce costs.

- Mainstream AI communities (such as Stable Diffusion) offer 4-bit and 2-bit versions of weights for easier inference across devices.

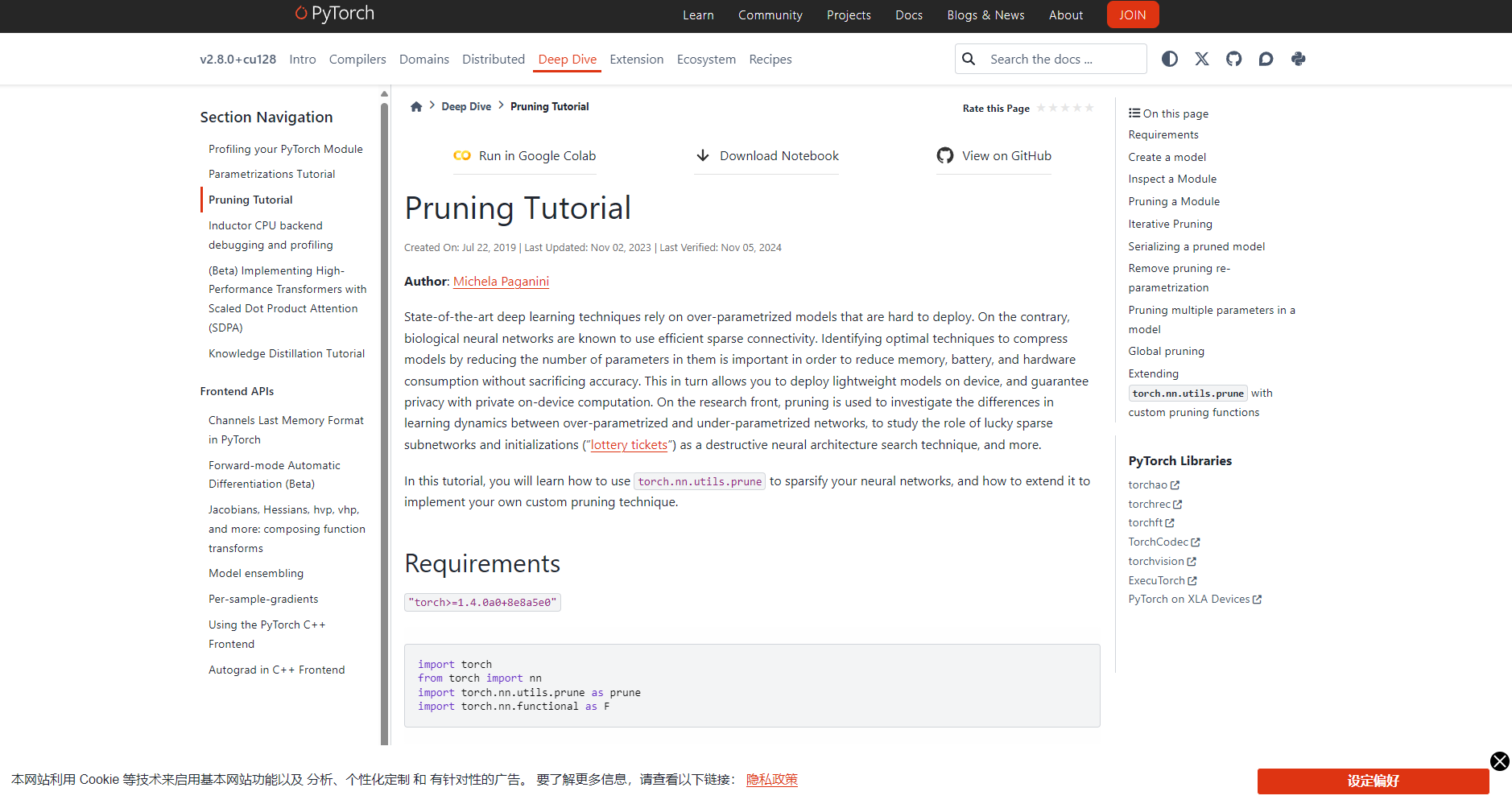

Pruning: Making Networks "Leaner" and Speeding Up Core Inference

Technical Principles

Pruning removes redundant or low-contribution weights and retains only the core parameters, yielding a smaller and more efficient model. It comes in unstructured (per-weight) and structured (per-channel or per-layer) forms, and the pruned model needs fine-tuning to recover the accuracy lost to pruning.
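
The two flavors can be sketched on a toy weight matrix as follows. This is a minimal illustration under stated assumptions: the function names are hypothetical, importance is measured by plain magnitude (one common criterion, not the only one), and the fine-tuning step is omitted.

```python
def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero out the smallest-magnitude fraction of weights."""
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)                # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]                      # ties at the threshold are pruned too
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def prune_channels(weights, keep):
    """Structured pruning: keep the `keep` rows (output channels) with the largest L1 norm."""
    norms = [sum(abs(w) for w in row) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)[:keep]
    return [weights[i] for i in sorted(ranked)]  # preserve the original row order


W = [[0.9, -0.02, 0.4],
     [0.01, 0.03, -0.05],
     [-0.7, 0.6, 0.08]]                          # toy weights, one row per output channel

print(magnitude_prune(W, sparsity=0.5))
print(prune_channels(W, keep=2))
```

Unstructured pruning keeps the matrix shape and only adds zeros, so it needs sparse kernels to translate into speed. Structured pruning returns a genuinely smaller matrix that runs faster on ordinary dense hardware.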

Tool Cases

- PyTorch pruning tools, TensorFlow Model Optimization, and SparseGPT, a high-efficiency framework for aggressive pruning of large models.

- All major cloud platforms support integrating these tools on a per-task basis.

Application Examples

- OpenAI and Meta reduce the number of LLM parameters by half through structured pruning.

- AI companies often use model slimming to embed models into lightweight devices.

Knowledge distillation: A powerful tool for compressing high-capacity models

Core Concepts

With a large model as the "teacher" and a small model as the "student," the teacher's behavior and knowledge are transferred to the student. The lightweight network can then approximate the main functions of the original model with far fewer parameters, making it suitable for applications that are sensitive to latency and hardware constraints.
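
One common way to transfer the teacher's behavior is to have the student match the teacher's softened output probabilities. The sketch below shows that soft-target loss with a temperature, in the classic formulation; the article does not say which loss the listed models use, so treat this as an assumption. The function names and logits are illustrative.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                              # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.2]                        # toy logits for one example
student = [2.5, 1.2, 0.3]
print("loss vs. teacher:", distillation_loss(student, teacher))
print("loss when identical:", distillation_loss(teacher, teacher))
```

In practice this term is usually combined with the ordinary cross-entropy loss on the true labels, and the gradient updates only the student.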

Mainstream Models and Business Ecosystem

| Model | Features/Applications | Supporting tools |
|---|---|---|
| DistilBERT | About 40% smaller than BERT; a representative of mainstream NLP distillation. | HuggingFace, etc. |
| MiniLM | Small size and high performance | Various open-source tools |
| MobileNet/SqueezeNet | Lightweight structure combined with distillation; preferred for mobile devices | TF Lite, PyTorch Mobile |

Application scenarios

- Voice robots and translation APIs use small models for ultra-low latency online inference

- Lightweight embedded biometric and facial expression analysis models can be deployed quickly.

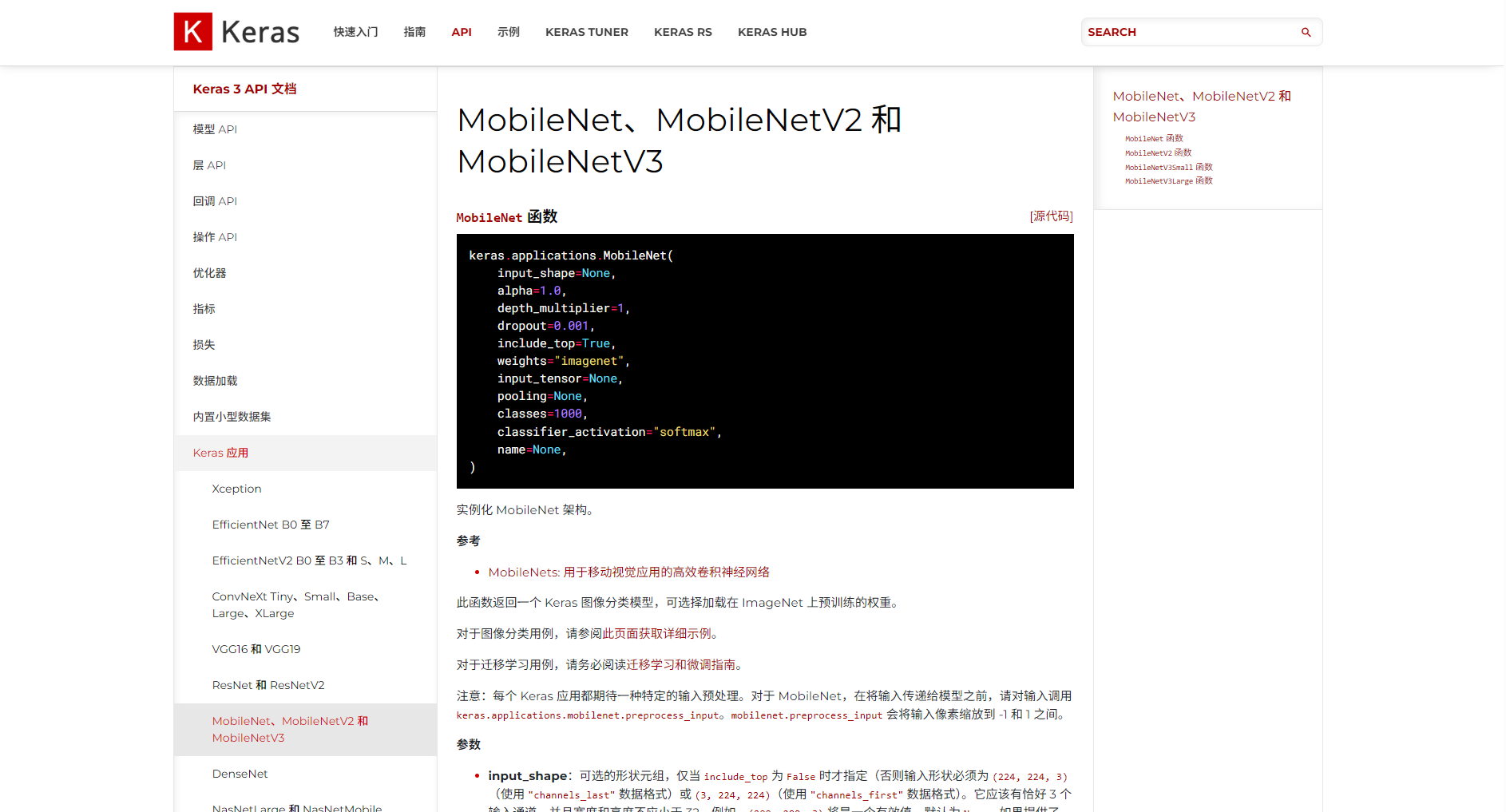

Lightweight structural design: AI engineering for edge devices

Key technologies

- Lightweight convolutional design (such as grouped convolution and channel compression)

- Fewer layers and smaller convolution kernels to improve computing efficiency.
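
A quick parameter count shows why grouped and depthwise separable convolutions are so much cheaper than a standard convolution. The layer sizes below are assumptions chosen for illustration, and the helper names are hypothetical; biases are omitted.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution with the given number of groups."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """A depthwise k x k conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    depthwise = conv_params(c_in, c_in, k, groups=c_in)
    pointwise = conv_params(c_in, c_out, 1)
    return depthwise + pointwise


C_IN, C_OUT, K = 128, 256, 3                     # assumed layer shape

standard = conv_params(C_IN, C_OUT, K)
grouped = conv_params(C_IN, C_OUT, K, groups=4)
separable = depthwise_separable_params(C_IN, C_OUT, K)

print("standard: ", standard)
print("grouped:  ", grouped, f"({standard / grouped:.1f}x fewer)")
print("separable:", separable, f"({standard / separable:.1f}x fewer)")
```

For this layer shape the depthwise separable version needs under one eighth of the weights, which is in line with the 1/5 to 1/10 reductions cited for lightweight architectures.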

Popular architectures and tools

| Architecture | Characteristics | Tool support | Typical use |
|---|---|---|---|
| MobileNetV2/V3 | Depthwise separable convolution, low power consumption | TF Lite, PyTorch Mobile | Mobile/IoT |
| EfficientNet | Compound scaling, highly versatile | Mainstream APIs | Embedded deployment |
| SqueezeNet | Compact Fire modules | EdgeML | Edge AI |

Examples of results

- A lightweight model can run standalone inference with only 1 GB of RAM while reaching 90% or more of a large model's performance.

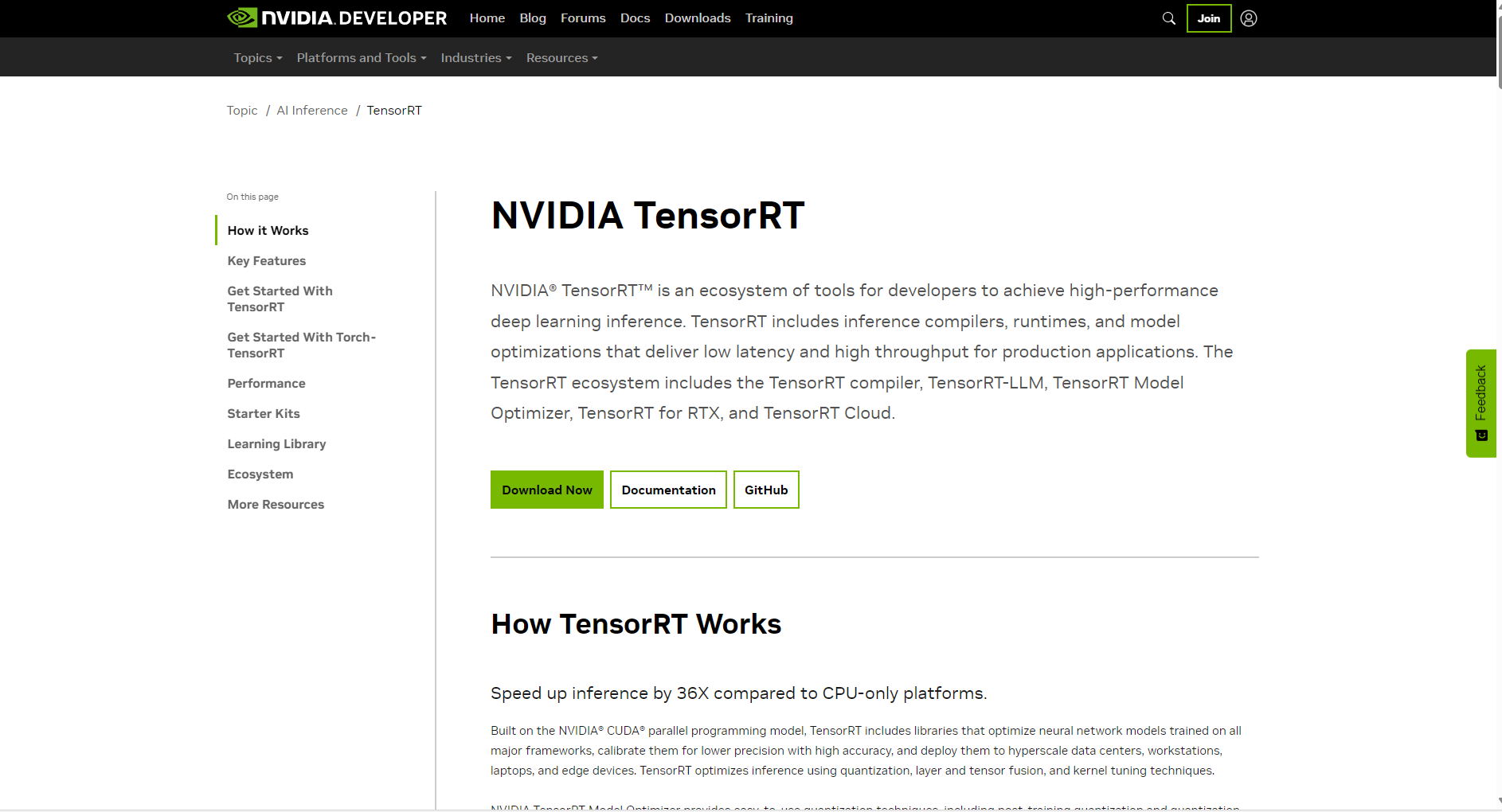

Compiler optimization & hardware acceleration: Making inference "fly"

Core Principles

Deep learning compilers such as TensorRT, XLA, and TVM translate model computations into instructions optimized for the target hardware. This greatly improves throughput and concurrency. The ONNX standard facilitates multi-platform compatibility.
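
The article does not list which rewrites these compilers perform. One widely used graph-level optimization in inference toolchains is folding a batch-normalization layer into the layer before it, so two operations become one at run time. The sketch below illustrates that idea on a tiny linear layer; it is not a description of TensorRT, XLA, or TVM internals, and all names and values are hypothetical.

```python
import math


def linear(W, b, x):
    """y = W x + b for a small dense layer."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time batch norm using stored running statistics."""
    return [g * (v - m) / math.sqrt(s + eps) + bt
            for v, g, bt, m, s in zip(y, gamma, beta, mean, var)]


def fold_batch_norm(W, b, gamma, beta, mean, var, eps=1e-5):
    """Return (W', b') such that linear(W', b', x) equals batch_norm(linear(W, b, x))."""
    scales = [g / math.sqrt(s + eps) for g, s in zip(gamma, var)]
    W_folded = [[w * sc for w in row] for row, sc in zip(W, scales)]
    b_folded = [(bi - m) * sc + bt for bi, m, sc, bt in zip(b, mean, scales, beta)]
    return W_folded, b_folded


W, b = [[0.5, -1.0], [2.0, 0.25]], [0.1, -0.2]   # toy layer
gamma, beta = [1.5, 0.8], [0.0, 0.3]
mean, var = [0.2, -0.1], [4.0, 0.25]
x = [1.0, 2.0]

two_ops = batch_norm(linear(W, b, x), gamma, beta, mean, var)
W_f, b_f = fold_batch_norm(W, b, gamma, beta, mean, var)
one_op = linear(W_f, b_f, x)
print(two_ops, one_op)
```

Both paths produce the same output up to floating-point rounding, but the folded version skips an entire layer's worth of memory reads and writes on every inference call.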

Mainstream applicable scenarios

- Enterprise-level APIs require ultra-high concurrency and low latency

- Real-time AI inference for autonomous driving/IoT/industrial control

- Elastic deployment of GPUs/FPGAs/NPUs in cloud services

Mainstream solutions and advantages

| Solution | Advantages | Platform/Hardware |
|---|---|---|
| TensorRT | GPU-adaptive optimization | NVIDIA family |
| ONNX Runtime | Extensive platform integration | CPU/GPU/FPGA/NPU |
| TVM | Custom graph optimization | Fully open source |
| vLLM/Triton | High-efficiency distributed inference | Large-scale cloud deployment |

Quantization and compression: Future trends and development guidelines

- Very low-bit (1-bit/1.58-bit) quantization: models like BitNet are becoming increasingly practical and are extremely resource-efficient.

- Combining pruning, quantization, and entropy coding further improves end-to-end efficiency (AlexNet can be compressed to about 3% of its original size).

- With AutoML and end-to-end pipelines, the barrier to entry continues to fall, and mainstream cloud platforms now support integrated deployment of automatic quantization, pruning, and distillation.
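
Two of the numbers above can be checked with a little arithmetic. A weight restricted to three values {-1, 0, +1} carries log2(3) bits, which is where the 1.58-bit figure comes from. And once pruning makes most codes zero, entropy coding can store them in fewer bits than their fixed width. The sketch below uses a made-up distribution of 2-bit codes, and `entropy_bits` is a hypothetical helper.

```python
import math
from collections import Counter


def entropy_bits(symbols):
    """Shannon entropy in bits per symbol: a lower bound for lossless entropy coding."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


# Three equally likely weight values {-1, 0, +1} carry log2(3) bits each.
ternary_bits = math.log2(3)

# Toy 2-bit codes after pruning: most weights are zero, so the distribution is skewed.
codes = [0] * 12 + [1] * 2 + [2] * 1 + [3] * 1

print(f"ternary weights: {ternary_bits:.2f} bits per weight")
print(f"fixed width: 2 bits, entropy: {entropy_bits(codes):.2f} bits per weight")
```

The more skewed the code distribution becomes after pruning and quantization, the larger the extra saving from the entropy-coding stage.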

Developer Practical Guide & Advanced Resources

- Choose a compression method that fits the scenario: for mobile phones and IoT, prioritize quantization and lightweight architectures; for large-model APIs, combine compilers, pruning, and multiple compression methods.

- Use tools such as HuggingFace Optimum and ONNX quantization to compress, run inference, and evaluate accuracy iteratively, so that accuracy stays at a consistent level.

- Leverage the integration capabilities of cloud platforms such as AWS SageMaker to improve delivery efficiency and keep toolchains and formats up to date.

- Follow the latest high-efficiency inference and distributed quantization tools, such as vLLM and OpenVINO, to deploy next-generation AI products quickly.
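
The compress-then-evaluate loop from the guide can be sketched as a search for the lowest bit width that keeps outputs within a tolerance. Everything here is a stand-in: the "model" is a single dot product, the tolerance and candidate bit widths are assumptions, and a real workflow would measure task accuracy on a validation set using the tools named above.

```python
def fake_quantize(weights, num_bits):
    """Quantize then dequantize with one symmetric scale, to simulate precision loss."""
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [round(w / scale) * scale for w in weights]


def predict(weights, x):
    """Stand-in model: a single dot product."""
    return sum(w * xi for w, xi in zip(weights, x))


def smallest_acceptable_bits(weights, inputs, tolerance, candidates=(2, 4, 8)):
    """Return (bits, drift) for the lowest bit width whose worst output drift is within tolerance."""
    reference = [predict(weights, x) for x in inputs]
    for bits in sorted(candidates):
        quantized = fake_quantize(weights, bits)
        drift = max(abs(predict(quantized, x) - r) for x, r in zip(inputs, reference))
        if drift <= tolerance:
            return bits, drift
    return None, None                            # no candidate met the tolerance


weights = [0.8, -0.5, 0.3, 0.1]                  # toy values
inputs = [[1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5], [0, 1, 0, 1]]

print(smallest_acceptable_bits(weights, inputs, tolerance=0.05))
print(smallest_acceptable_bits(weights, inputs, tolerance=0.1))
```

A tighter tolerance forces a higher bit width, which is the trade-off the guide asks you to evaluate before settling on a compression level.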

References: HuggingFace official quantization guide; ONNX official quantization documentation.
