What is edge computing? A comprehensive guide to the six major benefits of edge computing for AI applications

Edge computing is becoming a core driving force behind the practical application of AI and the Internet of Things (IoT). This article analyzes the definition, architectural characteristics, and market status of edge computing, and uses industry cases to summarize its six major advantages for AI applications: it significantly reduces latency, protects data privacy, saves bandwidth costs, improves reliability, accelerates deployment and maintenance, and supports industrial digital transformation. Finally, it reviews mainstream edge AI tools, their adoption challenges, and development trends, as a reference for enterprises and developers preparing for the new era of intelligent applications.

What is edge computing?

Definition and essence of edge computing

Edge computing refers to processing data near the data source (such as terminal devices, gateways, or on-site servers) rather than transmitting all data to a remote cloud. Deploying computing resources closer to the terminal can effectively reduce latency and improve security and efficiency.

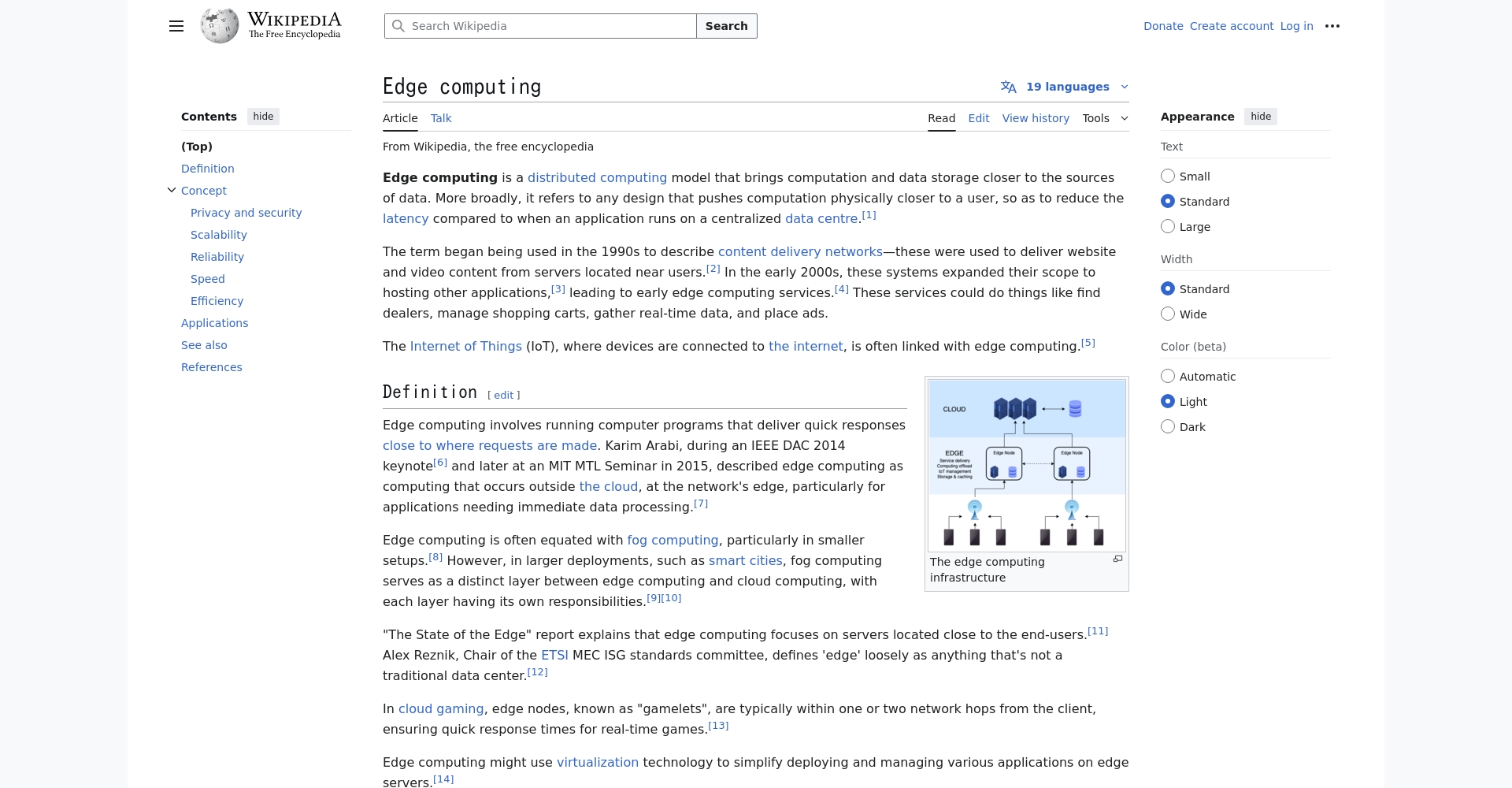

The difference between cloud computing and edge computing

| Indicator | Cloud computing | Edge computing |
|---|---|---|
| Processing location | Centralized in remote data centers | Close to the devices that generate the data |
| Latency | Subject to network latency | Very low latency, suitable for real-time response |
| Security and control | Partly dependent on the service provider's policies | Local data and processing can be controlled independently |
| Applicable scenarios | Large data volumes, non-real-time requirements | Real-time response, data privacy, industrial automation |

The surge in IoT devices and the widespread adoption of AI technology have made edge computing one of the core technologies for intelligent solutions.

The current market status and industrial applications of edge computing in AI applications

Core Concepts and Industry Drivers

Gartner predicts that by 2025, more than 50% of enterprise data will be processed at the edge rather than in a data center. Today, from self-driving cars and smart manufacturing to healthcare, retail, and security, edge computing is driving the rapid deployment of AI.

| Industry | Edge computing application examples | Represents AI tools or products |

|---|---|---|

| Manufacturing | Real-time quality inspection on the production line and prediction of equipment malfunctions. | Siemens Industrial Edge |

| Smart City | Real-time traffic monitoring and city lighting management | AWS IoT Greengrass |

| Medical | Vital signs monitoring and real-time analysis of medical images | NVIDIA Clara Guardian |

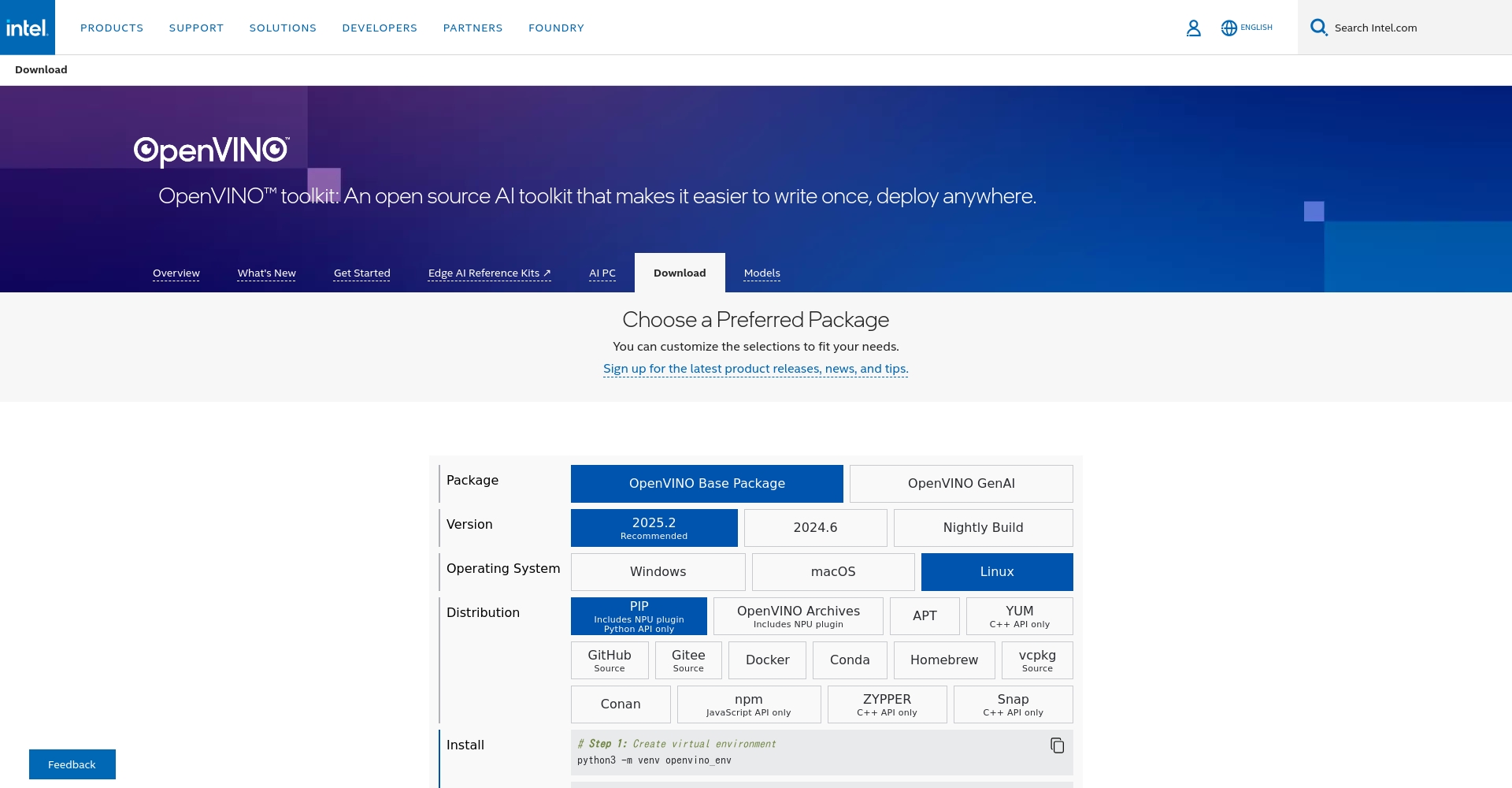

| Self-driving car | Road condition assessment and obstacle avoidance decision-making | Intel OpenVINO Toolkit |

| retail | Customer flow analysis, intelligent shop windows, real-time inventory tracking | Microsoft Azure Percept |

| Security monitoring | Intelligent facial recognition and event-triggered responses | Alibaba Cloud LinkIoT Edge |

Six major benefits of edge computing for AI applications

1. Significantly reduce data latency and support real-time AI decision-making.

For scenarios such as autonomous vehicles and smart factories, immediate inference with millisecond-level response is crucial for real-time tasks such as collision avoidance and robotic arm movements. Edge computing allows AI to perform inference directly on field equipment.
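
The latency argument can be made concrete with a small budget calculation. The sketch below is illustrative only: `InferencePath`, `meets_deadline`, and every millisecond figure are assumptions chosen for the example, not measurements from any real deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferencePath:
    """One way of serving an inference request (hypothetical model)."""
    name: str
    network_round_trip_ms: float  # time spent on the network, both directions
    inference_ms: float           # time the model itself needs

    def total_ms(self) -> float:
        return self.network_round_trip_ms + self.inference_ms


def meets_deadline(path: InferencePath, deadline_ms: float) -> bool:
    """Return True if the end-to-end response fits within the deadline."""
    return path.total_ms() <= deadline_ms


# Assumed figures: the edge device is slower at inference but has no network hop.
edge = InferencePath("edge", network_round_trip_ms=0.0, inference_ms=15.0)
cloud = InferencePath("cloud", network_round_trip_ms=80.0, inference_ms=5.0)

for path in (edge, cloud):
    print(path.name, path.total_ms(), meets_deadline(path, deadline_ms=20.0))
```

Under these assumed numbers the cloud path misses a 20 ms deadline even though its model runs faster, because the network round trip dominates the total.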

2. Protecting data privacy and enhancing security

Processing sensitive data only on local terminals reduces security risks during transmission and helps meet privacy compliance requirements such as GDPR. In medical settings, for example, NVIDIA Clara Guardian supports local AI image inference.

3. Agile scaling reduces bandwidth and storage costs.

Only key results are sent back to the cloud, saving bandwidth and storage for large-scale IoT applications. For example, AWS IoT Greengrass supports on-site inference followed by consolidated data transmission to the cloud.
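
As a rough illustration of sending only key results, the sketch below reduces a window of raw sensor readings to a small summary before upload. It does not use any vendor SDK: `summarize_window`, the threshold, and the synthetic readings are all assumptions made for this example.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[float], threshold: float) -> Dict[str, object]:
    """Reduce a non-empty window of raw readings to a compact summary."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        # Only readings above the threshold are forwarded individually.
        "alerts": [r for r in readings if r > threshold],
    }


# Synthetic data: 1,000 ordinary readings with one injected spike.
raw = [20.0 + (i % 10) * 0.1 for i in range(1000)]
raw[500] = 35.2

summary = summarize_window(raw, threshold=30.0)
raw_bytes = len(json.dumps(raw).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))
print(summary["count"], summary["alerts"], summary_bytes < raw_bytes)
```

The payload that leaves the device is the summary rather than the full window, which is where the bandwidth and storage savings come from. How much is saved in practice depends on the window size and on how many readings count as alerts.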

4. Improve the reliability and availability of AI models

Even if the cloud connection is interrupted, the local AI system can still make autonomous decisions and keep operating. This is especially important in harsh environments such as energy and mining.
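
One common way to get this behavior is a store-and-forward pattern: decide locally first, then upload results whenever the cloud is reachable. The sketch below is a minimal version under stated assumptions. `EdgeNode`, `FakeCloud`, and the temperature rule are hypothetical names invented for this example and do not correspond to any product's API.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class EdgeNode:
    """Decides locally and buffers results while the cloud is unreachable."""

    def __init__(self, decide: Callable[[float], str],
                 upload: Callable[[Dict], None], max_buffered: int = 1000) -> None:
        self._decide = decide
        self._upload = upload
        # Bounded buffer: once full, appending drops the oldest record.
        self._pending: Deque[Dict] = deque(maxlen=max_buffered)

    @property
    def pending_count(self) -> int:
        return len(self._pending)

    def handle(self, reading: float) -> str:
        decision = self._decide(reading)  # local decision, no cloud needed
        self._pending.append({"reading": reading, "decision": decision})
        self.flush()
        return decision

    def flush(self) -> None:
        """Upload buffered records in order; stop at the first failure."""
        while self._pending:
            try:
                self._upload(self._pending[0])
            except ConnectionError:
                return  # cloud unreachable, keep the record for later
            self._pending.popleft()


class FakeCloud:
    """Stand-in for a cloud endpoint, used only for this demonstration."""

    def __init__(self) -> None:
        self.online = True
        self.received: List[Dict] = []

    def upload(self, record: Dict) -> None:
        if not self.online:
            raise ConnectionError("cloud unreachable")
        self.received.append(record)


cloud = FakeCloud()
node = EdgeNode(decide=lambda t: "shutdown" if t > 90.0 else "ok",
                upload=cloud.upload)

cloud.online = False                                  # simulate an outage
decisions = [node.handle(t) for t in (70.0, 95.0)]    # decisions still made
cloud.online = True
node.flush()                                          # buffered records sent
```

The bounded buffer is a deliberate trade-off in this sketch: during a long outage it discards the oldest records instead of exhausting memory on a constrained device.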

5. Accelerate the deployment and flexible operation and maintenance of AI products

Tools such as OpenVINO or Link IoT Edge can be used to quickly deploy AI models across multiple devices, enabling plug-and-play setup and remote maintenance. This significantly shortens the innovation cycle.

6. Supports cross-domain hybrid architecture to facilitate industrial digital transformation.

Edge computing can be flexibly integrated with hybrid, public, and private clouds, helping AI solutions adapt to complex business operations and driving digital transformation and upgrading. Tools such as Microsoft Azure Percept are aimed at this kind of cloud-connected edge deployment.

A list of edge computing AI tools

| Tool/Product | Applicable scenarios | Main features | Link |
|---|---|---|---|
| Siemens Industrial Edge | Industrial automation | Modular deployment, industrial networking, edge AI detection | Official website |
| NVIDIA Clara Guardian | Medical and health | Real-time video analysis, status monitoring, and local data compliance | Official website |
| AWS IoT Greengrass | Smart cities, manufacturing | Local AI inference, cloud collaboration, cross-platform compatibility | Official website |
| Intel OpenVINO Toolkit | Self-driving cars, security | Multi-chip inference optimization and local AI deployment | Official website |
| Alibaba Cloud Link IoT Edge | Device connectivity, security | Large-scale device management, local intelligent computing, and remote OTA (over-the-air) updates | Official website |
| Microsoft Azure Percept | Retail, edge AI | Pre-configured AI hardware, visual and voice perception, and cloud collaboration | Official website |

Challenges and future trends of implementing edge computing

Challenges

- Local equipment resources are limited, and a balance needs to be struck between AI computing performance and cost.

- Ecosystem integration and protocol standards still need to be unified.

- Upgrading and maintaining multi-terminal AI models has a relatively high barrier to entry.

- Cybersecurity requires multi-layered protection.

Future Trends

- Edge AI will be deeply integrated with 5G/6G to create new scenarios with extremely low latency.

- The AI+IoT architecture continues to evolve, significantly enhancing the level of intelligent services in industries.

- Low-threshold edge AI development tools will become mainstream.

AI applications have become a driving force for industrial digital transformation. Edge computing, with its advantages of low latency, strong security, and high resilience, is redefining the application scenarios of AI. In the future, as ecosystems and tools mature, edge computing combined with AI will be widely adopted across industries. To maximize the value of data, we recommend that enterprises and developers plan their deployments early and make good use of professional tools to seize the opportunities of the intelligent wave.

