Ollama is a one-stop platform for running large models locally. It focuses on easy deployment, data privacy, and multi-model compatibility. It supports Windows, macOS, and Linux; integrates 30+ mainstream models such as Llama 3, Mistral, and Gemma; and runs fully offline on local hardware, making it suitable for individuals, businesses, and R&D teams. It is free and open source, and its developer-friendly API makes it a strong choice for building independent AI applications while keeping data secure.

In today's era of rapid AI application development, demand for local deployment of large language models (LLMs) is surging. Ollama, a tool focused on efficient local deployment, has attracted widespread attention from developers and security-sensitive enterprises worldwide. The Ollama official website follows the principle of "simplified startup and local control", promoting private, customizable large-model applications in which data stays under the user's control.

Ollama's main functions

Ollama does not provide its own large language model. Instead, it is a platform for running and managing large language models locally. It supports mainstream models such as Meta Llama 3, Mistral, and Gemma, and features multi-system compatibility, low resource consumption, ease of operation, and a rich API ecosystem.

- Rapid deployment across multiple platforms: Supports Windows, macOS, Linux, Docker, and Raspberry Pi.

- 30+ mainstream models built in: One-click switching, with support for custom extensions.

- 4GB/8GB of video memory is enough to run mainstream models: Work can be scheduled on either the CPU or the GPU.

- Rich developer ecosystem: REST API, Python/JS SDKs, and compatibility with LangChain, RAG pipelines, etc.

- Fully local: Data and inference stay private and offline.

- Visual interface: Integrates with Open WebUI/Chatbox for a ChatGPT-like experience.

- Active community: A wide variety of plugins, plus Modelfile-based personalized configuration.

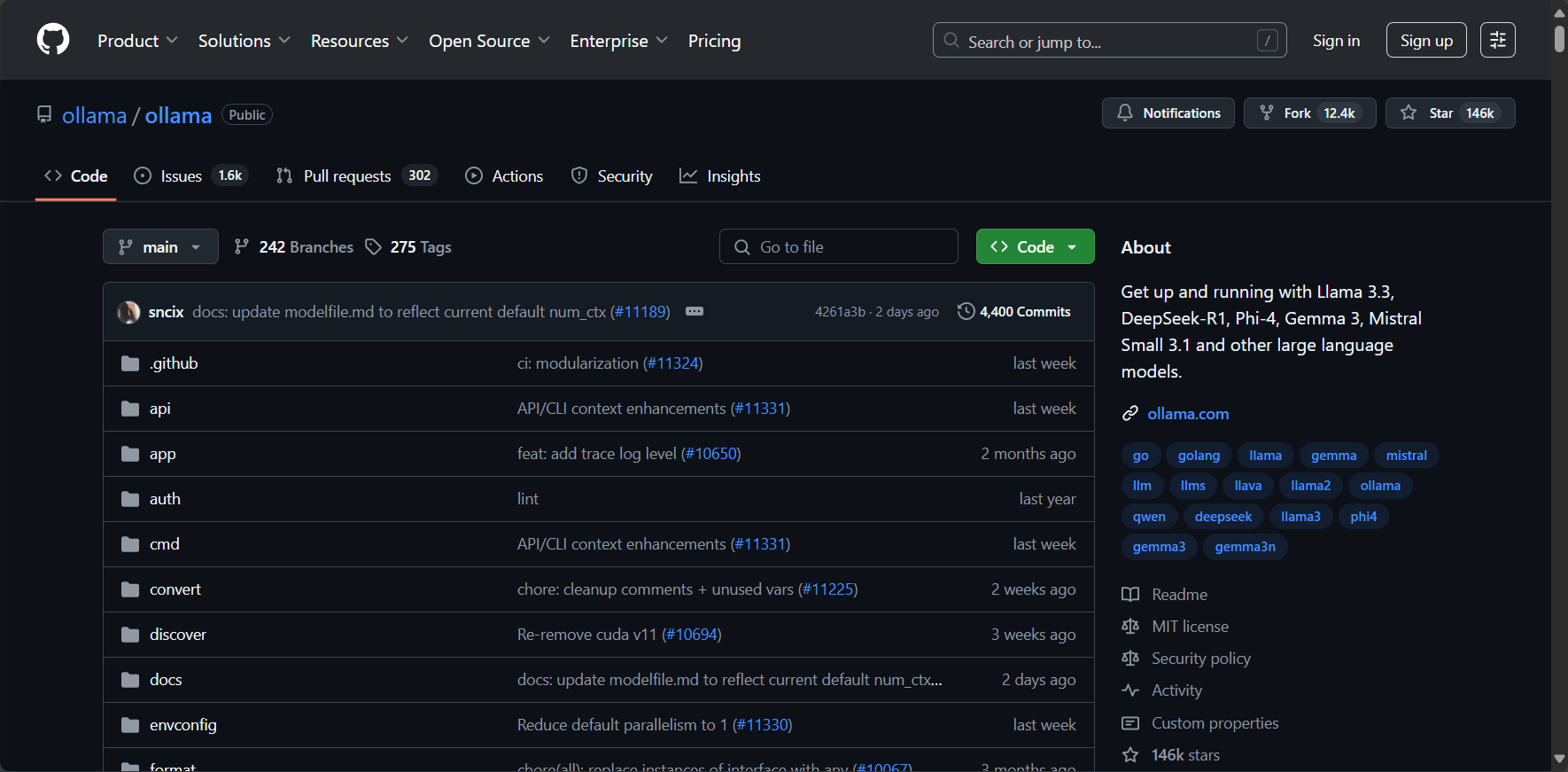

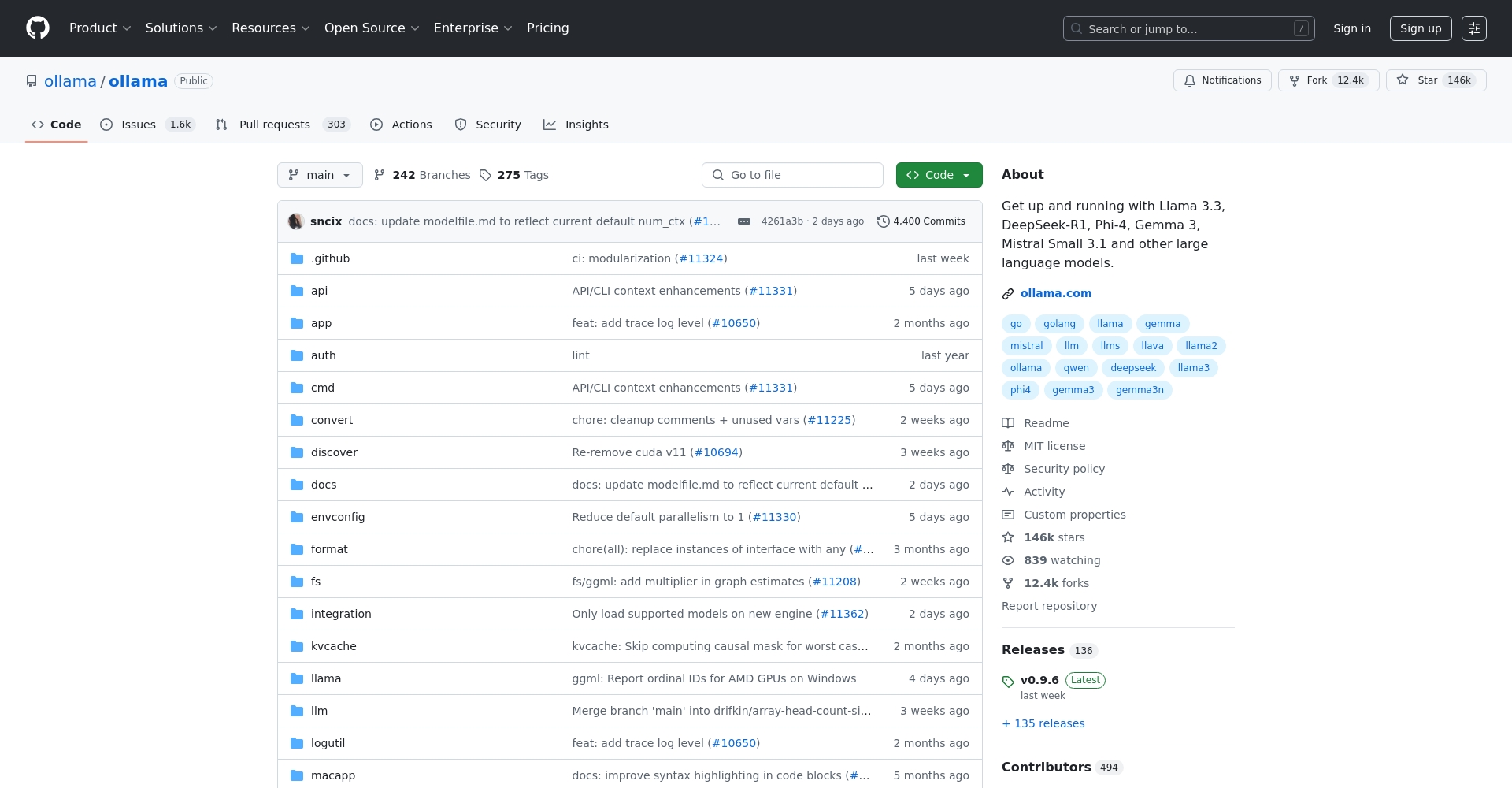

For detailed functions, please see the Ollama official website and the GitHub documentation.

Function Overview (Table)

| Functional category | Explanation and examples |
|---|---|
| Platform compatibility | Supports Windows, macOS, Linux, Docker, and Raspberry Pi |
| Model management | 30+ models, including Llama 3, Gemma, Mistral, etc. |
| Resource optimization | Runs with 4-8GB of video memory; works on both CPU and GPU |
| Data privacy | Runs locally; data stays completely private |
| API integration | REST API/SDK/RAG/Chatbox/Open WebUI |
| GUI front end | Visual front ends such as Open WebUI, with a ChatGPT-like experience |
| Developer ecosystem | Modelfile, plugins, and an active community |
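The Modelfile personalization mentioned in the feature list can be illustrated with a minimal sketch. The helper name `build_modelfile`, the system prompt, and the temperature value are hypothetical choices for illustration; `FROM`, `PARAMETER`, and `SYSTEM` are Modelfile instructions, and the sketch assumes the system prompt contains no triple quotes.

```python
def build_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return Modelfile text that customizes a base model (illustrative helper)."""
    # Assumption: the prompt is wrapped in triple quotes, so it must not contain them.
    if '"""' in system_prompt:
        raise ValueError("system_prompt must not contain triple quotes")
    lines = [
        f"FROM {base_model}",                      # base model to build on
        f"PARAMETER temperature {temperature}",    # sampling temperature
        f'SYSTEM """{system_prompt}"""',           # system message for every chat
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the generated text; save it as a file named Modelfile to use it.
    print(build_modelfile("llama3", "You are a concise assistant."))
```

Saving the output as `Modelfile` and running `ollama create my-assistant -f Modelfile` should register a customized model, where `my-assistant` is a placeholder name.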

Ollama's pricing and plans

Ollama is open source and free; all mainstream functions and models are available free of charge.

Users can download it themselves from the official website, with no commercial subscriptions or hidden fees. Enterprise customization and self-hosting also require no licensing fees, and most mainstream models are open source (note the license terms of individual models).

| Use case | Price | Remarks |
|---|---|---|
| Software download | Free | Multi-platform |
| Mainstream model inference | Free | Llama 3, etc. |
| Community support | Free | GitHub/Discord |
| Enterprise self-hosting | Free | No proprietary interfaces |
| Customization/plugins | Free | Open source community |

How to use Ollama

Installation is extremely simple and getting started takes a single command, which suits both beginners and developers.

- Hardware preparation: 8GB of RAM or more; an Nvidia graphics card is even better.

- Install: Download the installer package for Windows/macOS; on Linux, run `curl -fsSL https://ollama.com/install.sh | sh`. Alternatively, use Docker. See the installation page for details.

- Pull/run a model: `ollama pull llama3` downloads a model, `ollama run llama3` runs it, `ollama list` shows downloaded models, and `ollama ps` shows running models.

- Development integration (API): `curl -X POST http://localhost:11434/api/generate -d '{"model": "llama3", "prompt": "Please summarize the following:"}'`

- Visual interface: We recommend front ends such as Open WebUI and Chatbox (see the integration projects below).
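The API step above can also be sketched in Python using only the standard library. This is a minimal sketch, assuming a local Ollama server on the default port with `llama3` already pulled; the function names are illustrative, and `"stream": false` is added so the server replies with a single JSON object rather than a stream of chunks.

```python
import json
import urllib.request

# Default local endpoint, taken from the curl example.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for /api/generate with streaming disabled."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With streaming disabled, the "response" field holds the full answer.
    return body["response"]


if __name__ == "__main__":
    try:
        print(generate("llama3", "Please summarize the following: Ollama runs models locally."))
    except OSError as error:
        # Connection failures and timeouts are OSError subclasses.
        print(f"Could not reach Ollama at {OLLAMA_URL}: {error}")
```

Separating request construction from sending keeps the payload easy to inspect without a running server.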

| Step | Action | Example command |
|---|---|---|
| Install | System download/command line/Docker | `curl -fsSL https://ollama.com/install.sh \| sh` |
| Pull model | ollama pull | `ollama pull llama3` |
| Run model | ollama run | `ollama run llama3` |
| Multi-model management | ollama list/ps/rm | `ollama list` / `ollama ps` / `ollama rm llama3` |
| API documentation | GitHub/official website | API documentation |
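The multi-model management step has a programmatic counterpart: the local server's `/api/tags` endpoint returns the downloaded models as JSON. The sketch below is illustrative, with hypothetical function names; it assumes each entry carries a `name` and a `size` in bytes, and it keeps the parsing separate so it can be tried without a running server.

```python
import json
import urllib.request

# Default local endpoint that lists downloaded models.
TAGS_URL = "http://localhost:11434/api/tags"


def summarize_models(tags_payload: dict) -> list[tuple[str, float]]:
    """Return (name, size in GB) pairs sorted by name."""
    models = tags_payload.get("models", [])
    # Sizes are converted from bytes to decimal gigabytes, rounded to 0.1 GB.
    return sorted((m["name"], round(m.get("size", 0) / 1e9, 1)) for m in models)


def list_local_models(timeout: float = 10.0) -> list[tuple[str, float]]:
    """Query the local server and summarize the models it reports."""
    with urllib.request.urlopen(TAGS_URL, timeout=timeout) as reply:
        return summarize_models(json.load(reply))


if __name__ == "__main__":
    try:
        for name, size_gb in list_local_models():
            print(f"{name}\t{size_gb} GB")
    except OSError as error:
        print(f"Could not reach Ollama at {TAGS_URL}: {error}")
```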

Who is Ollama suitable for?

- AI R&D/programming enthusiasts: Local experimentation and algorithm innovation.

- Small and medium-sized enterprises/security-sensitive organizations: All data stays private on premises.

- NLU/multimodal researchers: Seamless model switching.

- Individual geeks/personal AI use: Local knowledge base, AI assistant.

- Startups/development teams: A one-stop integrated AI backend engine.

Applicable scenarios include enterprise local knowledge bases, document Q&A, PDF processing, code assistants, image recognition, multimodal RAG, customer service bots, etc.

Other highlights and community ecosystem

List of Mainstream Supported Models

| Model name | Parameter size | Disk size | Highlights and uses |
|---|---|---|---|
| Llama 3 | 8B+ | 4.7GB+ | Comprehensive Q&A, multilingual |
| Mistral | 7B | 4.1GB | High performance, English preferred |
| Gemma | 1B-27B | 815MB~17GB | Multilingual and efficient |
| DeepSeek | 7B+ | 4.7GB+ | Information retrieval strength |
| LLaVA | 7B | 4.5GB | Image/multimodal |
| Qwen | 7B+ | 4.3GB+ | Multilingual, Chinese preferred |
| Starling | 7B | 4.1GB | Dialogue fine-tuning |

- Open WebUI/Chatbox/LibreChat: 20+ third-party GUIs, plus a plugin ecosystem for PDFs, web page scraping, knowledge bases, and more.

- With 140,000 stars on GitHub, open API/SDK/plugins, and active community support.

- It is compatible with mainstream frameworks such as LangChain, LlamaIndex, and Spring AI.

Frequently Asked Questions

Q: Can Ollama run "completely locally"?

Yes. Ollama supports offline deployment and inference end to end, and no data is shared externally, which protects privacy.

Q: What is the essential difference between Ollama and ChatGPT?

| Aspect | Ollama | Cloud-based platforms such as ChatGPT |
|---|---|---|
| Deployment | Local, private, offline | Public cloud |
| Data security | 100% local | Data must be uploaded to the cloud |
| Cost | Free and open source | Subscription/pay-as-you-go |
| Customization | Powerful, plugin-based | Limited |
| Model selection | 30+ models of your choice | Fixed by the provider |

Q: What are the hardware requirements for large models?

For a 7B model, 8GB of RAM and 4GB of VRAM are sufficient; a CPU alone works, but a GPU is better. For a 13B model, 16GB of RAM and 8GB of VRAM are recommended. In both cases, reserve hard drive space for the model files.

| Model size | RAM | VRAM | Notes |
|---|---|---|---|
| 7B | 8GB | 4GB | Works on CPU or GPU |
| 13B | 16GB | 8GB | Better performance |
| 33/70B | 32GB+ | 16GB+ | High performance/server |
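The table above can be turned into a small lookup as a rough planning aid. This is a sketch under stated assumptions: the names `HardwareTier` and `recommend` are hypothetical, sizes that fall between rows are rounded up to the next tier (so an 8B model maps to the 13B row), and the 33/70B row is treated as covering models up to 70B with its figures as minimums.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareTier:
    max_params_b: float  # largest model size (billions of parameters) the tier covers
    ram_gb: int          # suggested system memory in GB
    vram_gb: int         # suggested video memory in GB


# Tiers copied from the table; the last row is treated as "up to 70B" (assumption).
TIERS = [
    HardwareTier(7, 8, 4),
    HardwareTier(13, 16, 8),
    HardwareTier(70, 32, 16),
]


def recommend(params_b: float) -> HardwareTier:
    """Return the smallest tier whose upper bound covers the model size."""
    if params_b <= 0:
        raise ValueError("params_b must be positive")
    for tier in TIERS:
        if params_b <= tier.max_params_b:
            return tier
    raise ValueError(f"no tier in the table covers a {params_b}B model")


if __name__ == "__main__":
    for size in (7, 13, 70):
        tier = recommend(size)
        print(f"{size}B: {tier.ram_gb}GB RAM, {tier.vram_gb}GB VRAM")
```

Rounding up keeps the suggestion conservative; actual needs also depend on quantization and context length, which the table does not cover.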

Conclusion

As the wave of large AI models advances, Ollama, with its minimalist local deployment, complete privacy, and open compatibility, has become a preferred platform for AI developers, enterprises, and geeks worldwide. Whether you are an individual user, a business, or a startup team, Ollama provides a solid foundation and flexible room for expansion for your large-model applications and innovations. Follow the community for the latest plugins, models, enterprise-level solutions, and other ecosystem updates.


The Ollama listing on this site, AI Miao Navigation, is compiled from internet sources, and the accuracy and completeness of its external links are not guaranteed. AI Miao Navigation has no actual control over the content of these external links. As of 5:30 PM on July 14, 2025, the content on this webpage was compliant and legal. If any content on the webpage later becomes illegal, you can contact the website administrator directly to have it deleted. AI Miao Navigation assumes no responsibility.
