In recent years, artificial intelligence and natural language processing technologies have developed rapidly, and HuggingFace, which aggregates innovation and drives the industry ecosystem, has become a focus of industry attention. As one of the world's most important open-source AI platforms, HuggingFace is not only hailed as the "GitHub of AI" but has also become a hub for AI models and industry collaboration. This report provides a comprehensive overview of HuggingFace's core functions, pricing structure, practical applications, target audience, and impact on the development of the industry.

HuggingFace Platform Introduction

Founded in 2016 and headquartered in New York, USA, HuggingFace started as a chatbot startup targeting teenagers. Thanks to its open-source strategy, it soon pivoted into an open-source community platform for the AI industry. Today, HuggingFace brings together developers, researchers, and companies from around the world and provides comprehensive technical and community support for AI research, industrial adoption, and innovation and entrepreneurship.

Visit the official HuggingFace platform: https://huggingface.co/

Its defining feature is openness and sharing. It hosts openly available AI models, datasets, tools, and app demos across fields such as NLP, computer vision (CV), and audio. It also supports running them online, collaborative development, and secure hosting.

HuggingFace's main functions

HuggingFace offers a comprehensive set of tools covering the full workflow, from data preparation to model development and application deployment. The table below summarizes its core products and modules:

| Component | Function overview | Quick links |
| --- | --- | --- |
| Hugging Face Hub | Hosting for models, datasets, and Spaces; community collaboration | Hub Documentation Entry |
| Transformers library | Pretrained models for training and inference on text, images, and audio | Transformers Guide |
| Datasets library | Browsing, downloading, and managing high-quality standardized datasets | Datasets Guide |
| Tokenizers | High-performance tokenization and subword splitting for many languages | Tokenizers Guide |
| Diffusers | Diffusion models for image and video generation, a core component of generative AI | Diffusers Introduction |
| Evaluate | Model evaluation tools and metrics | Evaluate documentation |
| Gradio | Build a web UI demo for an AI model in a few lines of code | Gradio documentation |
| Safetensors | Secure, efficient file format for model weights | Safetensors Description |

Key Highlights and Features

1. HuggingFace Hub:

With Git at its core, HuggingFace Hub supports hosting, searching, and version control for models, datasets, and Spaces apps. Users can upload and manage models and other resources for free and iterate on them collaboratively with developers worldwide.
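Every Hub repository is a Git repository. As a result, any file can be addressed by its repository, a revision (branch, tag, or commit hash), and a path. The sketch below assembles the direct-download "resolve" URL in plain Python. It assumes the public `https://huggingface.co` endpoint and the URL layout used by the `huggingface_hub` helper `hf_hub_url`. The repository names in the example are hypothetical.

```python
from urllib.parse import quote

HUB_ENDPOINT = "https://huggingface.co"  # assumed default: the public Hub

# URL prefix per repo type: model repos sit at the root of the endpoint
REPO_TYPE_PREFIXES = {"model": "", "dataset": "datasets/", "space": "spaces/"}


def hub_file_url(repo_id: str, filename: str, revision: str = "main",
                 repo_type: str = "model", endpoint: str = HUB_ENDPOINT) -> str:
    """Build the direct-download ("resolve") URL of one file in a Hub repo.

    `revision` may be a branch, a tag, or a commit hash, since every Hub
    repo is a Git repository.
    """
    if repo_type not in REPO_TYPE_PREFIXES:
        raise ValueError(f"unknown repo_type: {repo_type!r}")
    # Revisions such as "refs/pr/1" contain slashes, so encode them fully
    rev = quote(revision, safe="")
    prefix = REPO_TYPE_PREFIXES[repo_type]
    return f"{endpoint.rstrip('/')}/{prefix}{repo_id}/resolve/{rev}/{filename}"


# Hypothetical repository, pinned to a hypothetical tag
print(hub_file_url("user/my-model", "config.json", revision="v1.0"))
# https://huggingface.co/user/my-model/resolve/v1.0/config.json
```

Pinning a tag or commit hash instead of `main` gives reproducible downloads even if the repository is updated later.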

2. Transformers library:

Transformers is one of the most widely used libraries in NLP and beyond. It provides pretrained models such as BERT, GPT, T5, Llama, and StableLM. It supports tasks including text classification, summarization, named entity recognition, text generation, machine translation, image classification, and speech recognition. It works with the mainstream Python deep learning frameworks PyTorch, TensorFlow, and JAX.

Related links: Transformers library details

3. Datasets library:

It provides access to tens of thousands of datasets for NLP, CV, audio, and other scenarios. Features include filtered search and download, one-line loading (including streaming access), and custom dataset uploads for building personalized AI training pipelines.

See example: Datasets browsing
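Streaming means records are fetched and processed one at a time instead of downloading the whole dataset first. In the `datasets` library this is done with `load_dataset(..., split="train", streaming=True)`, which returns an `IterableDataset` with lazy `.filter()` and `.take()` methods. The sketch below imitates that behaviour with plain Python generators. `stream_records` is a hypothetical stand-in for a remote dataset, not part of any library.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def stream_records(n: int) -> Iterator[dict]:
    """Hypothetical stand-in for a remote dataset: yields one record at a time."""
    for i in range(n):
        yield {"id": i, "text": f"example {i}", "label": i % 2}


def stream_filter_take(records: Iterable[dict],
                       keep: Callable[[dict], bool], k: int) -> List[dict]:
    """Lazily filter a record stream and stop after k matches.

    Only as many records as needed are pulled from the source; nothing is
    materialized up front, which is the point of streaming.
    """
    return list(islice((r for r in records if keep(r)), k))


# A nominally huge stream is fine, because only the first few records are read
first_positive = stream_filter_take(stream_records(10**9), lambda r: r["label"] == 1, 3)
print([r["id"] for r in first_positive])  # [1, 3, 5]
```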

4. Tokenizers:

HuggingFace's in-house, high-performance tokenization library has a core written in Rust. It speeds up text preprocessing for training and adapts to many languages through subword algorithms such as BPE, WordPiece, and SentencePiece-style Unigram.
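The following minimal pure-Python sketch makes the WordPiece approach concrete. It implements the greedy longest-match-first inference step for a single word: at each position it takes the longest vocabulary entry that fits, marks word-internal pieces with `##`, and falls back to `[UNK]` if no piece matches. The toy vocabulary is invented for illustration. The real Tokenizers library implements this step, plus normalization and pre-tokenization into words, in Rust.

```python
from typing import List, Set


def wordpiece_tokenize(word: str, vocab: Set[str], unk_token: str = "[UNK]",
                       max_chars: int = 100) -> List[str]:
    """Greedy longest-match-first WordPiece segmentation of one word."""
    if len(word) > max_chars:
        return [unk_token]
    tokens: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is in the vocabulary
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # continuation pieces carry the ## prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk_token]  # no segmentation exists: the whole word is unknown
        tokens.append(match)
        start = end
    return tokens


VOCAB = {"un", "##aff", "##able", "play", "##ing"}  # toy vocabulary
print(wordpiece_tokenize("unaffable", VOCAB))  # ['un', '##aff', '##able']
```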

5. Diffusers and the field of generative AI:

For generative AI, the Diffusers library supports Stable Diffusion, ControlNet, and many other diffusion pipelines. This makes it suitable for innovative applications in text-to-image, video, and audio generation.

Entrance: Diffusers library

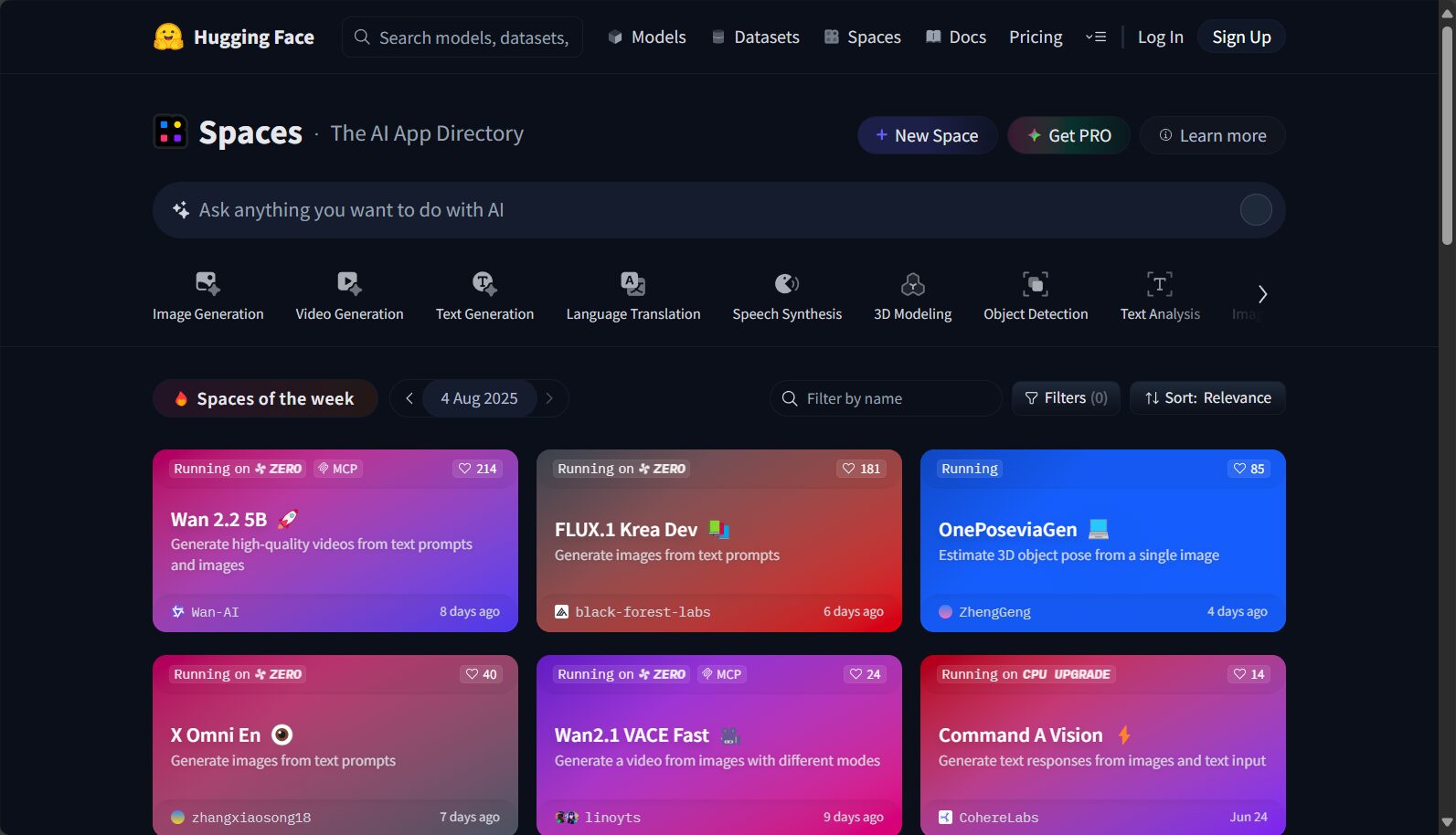
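Diffusion models learn to reverse a fixed noising process. The sketch below shows that forward process for a DDPM-style linear noise schedule. `alpha_bar_t` is the running product of `(1 - beta_t)`, and a clean sample `x0` is noised as `x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps` with Gaussian `eps`. The defaults (1,000 steps, beta rising linearly from 0.0001 to 0.02) are assumed to match those of Diffusers' `DDPMScheduler`, whose `add_noise` method performs this step on tensors. This plain-Python version works on lists purely for illustration.

```python
import math
import random
from typing import List


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """alpha_bar_t = prod_{s <= t} (1 - beta_s) for a linearly spaced beta schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0: List[float], t: int, alpha_bars: List[float],
              rng: random.Random) -> List[float]:
    """Forward diffusion step: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps, eps ~ N(0, 1)."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


alpha_bars = linear_alpha_bars()
rng = random.Random(0)
# At the last step almost no signal remains: the output is close to pure Gaussian noise
print(add_noise([0.5, -0.2, 1.0], t=999, alpha_bars=alpha_bars, rng=rng))
```

A generative model is trained to predict `eps` from `x_t` and `t`. Sampling then runs this process in reverse, starting from pure noise.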

6. Spaces & Online Demo Room

The platform hosts tens of thousands of community-built Spaces (low-code apps and online demos). In these, users can try text generation, image generation, question answering, speech recognition, and other functions directly in the browser.

Access: Spaces Demo

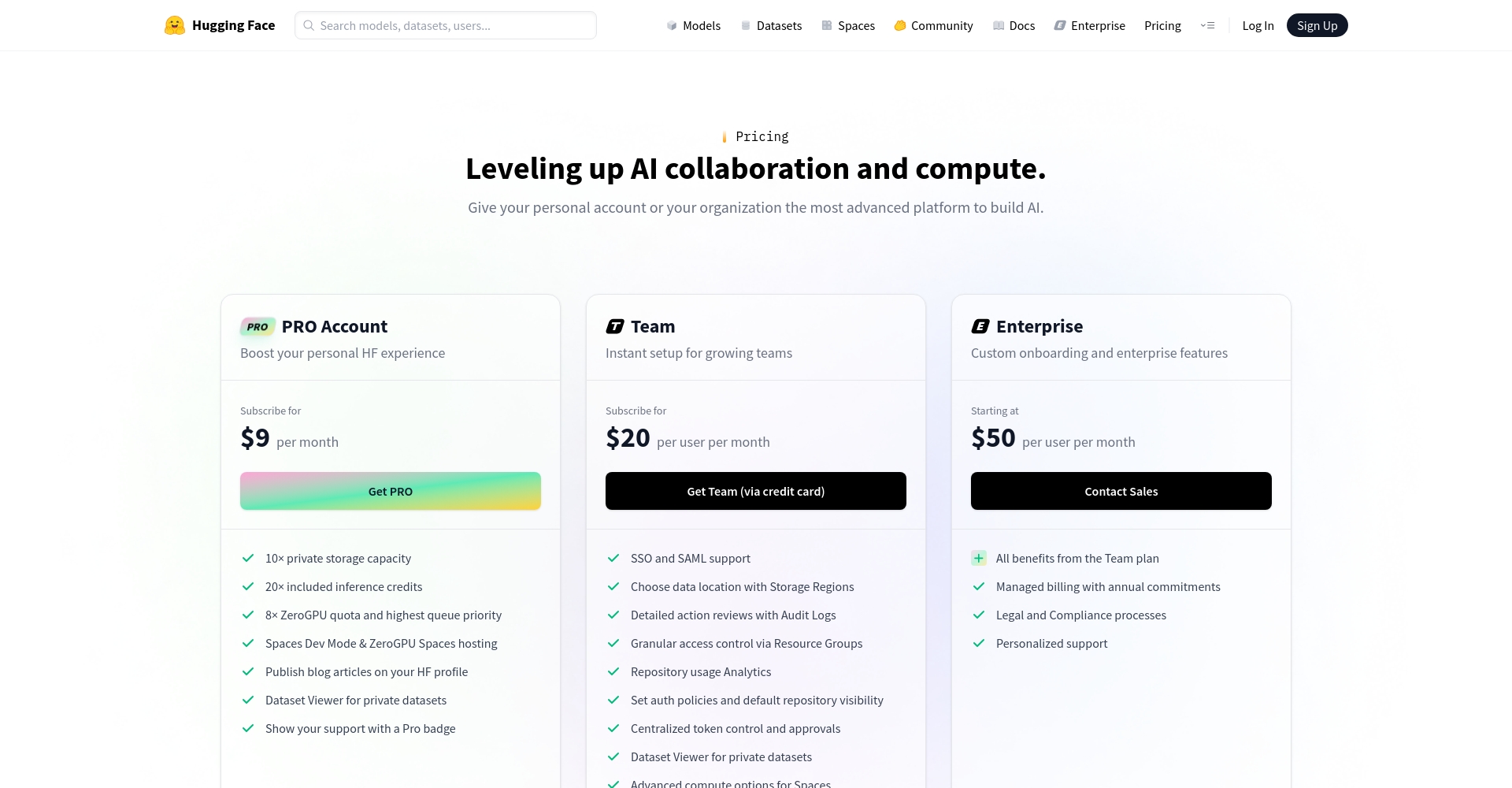
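A Gradio Space is essentially a Python function wrapped in a web interface. The sketch below separates the two parts. `predict` is a hypothetical keyword-based scorer standing in for a real model call. It returns a label-to-confidence dict, which is the shape Gradio's `"label"` output displays. `build_demo` wraps it with `gr.Interface`. Gradio is imported lazily so the scoring logic can run without Gradio installed.

```python
from typing import Dict

# Hypothetical toy word lists standing in for a real sentiment model
POSITIVE_WORDS = {"good", "great", "powerful", "love"}
NEGATIVE_WORDS = {"bad", "slow", "broken", "hate"}


def predict(text: str) -> Dict[str, float]:
    """Return label -> confidence, the format Gradio's "label" output accepts."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE_WORDS for w in words)
    neg = sum(w in NEGATIVE_WORDS for w in words)
    p = (pos + 1) / (pos + neg + 2)  # add-one smoothing keeps p strictly inside (0, 1)
    return {"POSITIVE": p, "NEGATIVE": 1.0 - p}


def build_demo():
    """Wrap `predict` in a Gradio Interface (requires `pip install gradio`)."""
    import gradio as gr  # imported lazily so predict() can be tested without Gradio
    return gr.Interface(fn=predict, inputs="text", outputs="label")
```

In a Gradio Space, `app.py` would typically end with `build_demo().launch()`.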

HuggingFace Pricing & Solutions

Most of HuggingFace's features are free for individuals and researchers. Flexible paid options are available for more advanced needs and enterprise scenarios.

Detailed pricing: https://huggingface.co/pricing

The main options are compared below:

| Plan type | Price (USD) | Main features |
| --- | --- | --- |
| Free plan | $0 | Basic Hub access, model/dataset downloads, and open-source community participation |
| Pro | $9/month | Higher API call limits, access to advanced inference tools such as ZeroGPU, and early access to new features |
| Enterprise Hub | $20/user/month | Enhanced privacy, permission configuration, enterprise security, and data isolation |
| Spaces Hardware | From $0/hour | On-demand CPU/GPU/accelerator resources for large-scale computing experiments |
| Inference Endpoints | Usage-based | Cloud-based real-time model inference with automatic elastic scaling |

Enterprises can also arrange private deployments, customized APIs, and dedicated security, encryption, and compliance protection as needed.

Detailed price reference: Pricing Explanation
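Seat-based plans and hourly compute are billed differently, so a rough monthly budget is the sum of the two. The sketch below encodes that arithmetic. The per-seat price comes from the pricing table (for example, $20 per user for Enterprise Hub). The hourly hardware rate in the example is a hypothetical placeholder, not a quoted price.

```python
def estimate_monthly_cost(seats: int, seat_price: float,
                          hardware_hours: float = 0.0, hourly_rate: float = 0.0) -> float:
    """Rough monthly budget: per-seat subscription plus hourly compute.

    Prices are inputs rather than constants; check the pricing page for current rates.
    """
    if seats < 0 or hardware_hours < 0:
        raise ValueError("seats and hours must be non-negative")
    return seats * seat_price + hardware_hours * hourly_rate


# 5 Enterprise Hub seats plus 40 hours of hardware at a hypothetical $1.00/hour
print(estimate_monthly_cost(5, 20, hardware_hours=40, hourly_rate=1.0))  # 140.0
```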

How to use HuggingFace

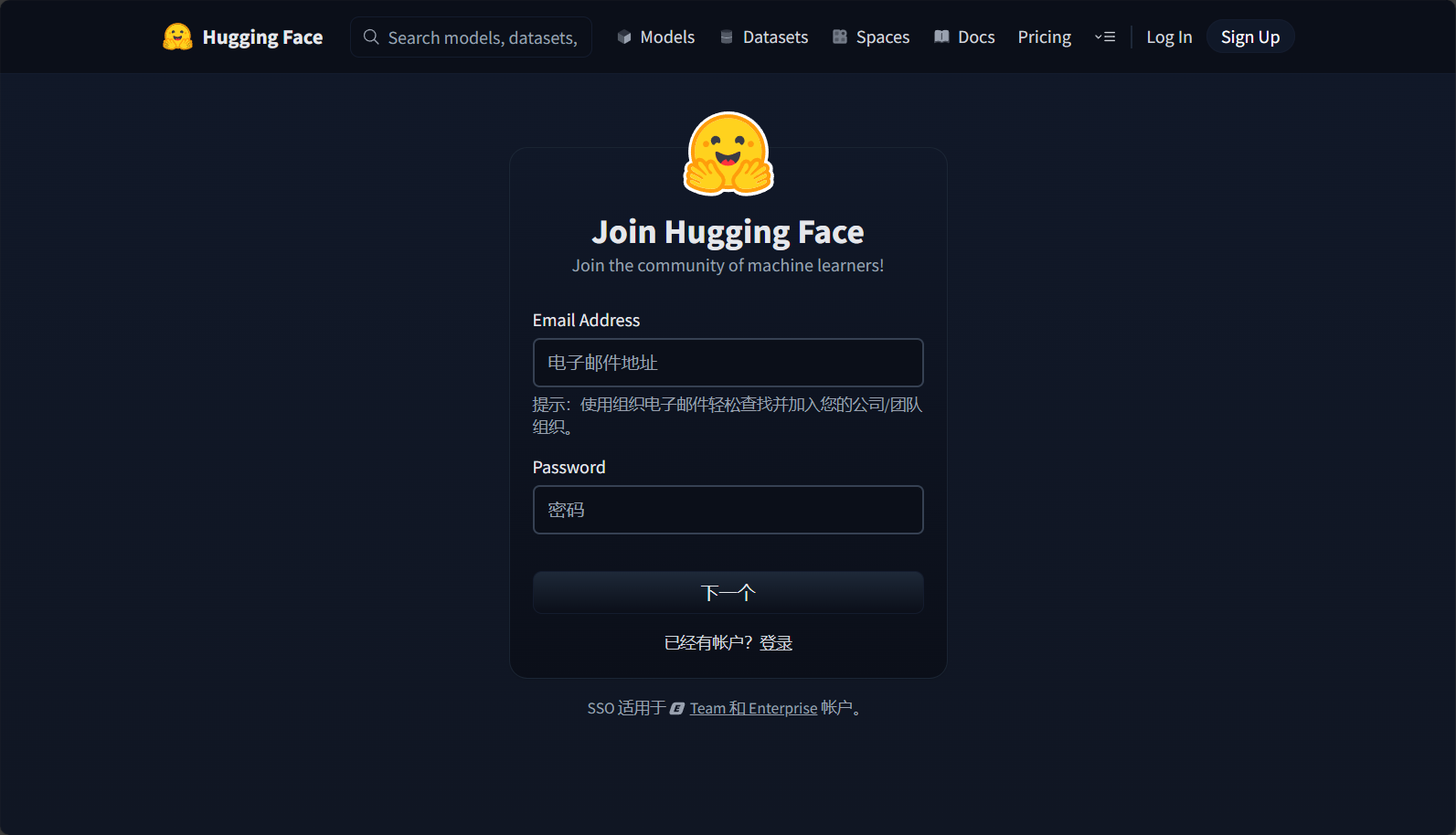

1. Account Registration and Environment Preparation

– Register an account: Registration page

– Get an API token: Token settings page

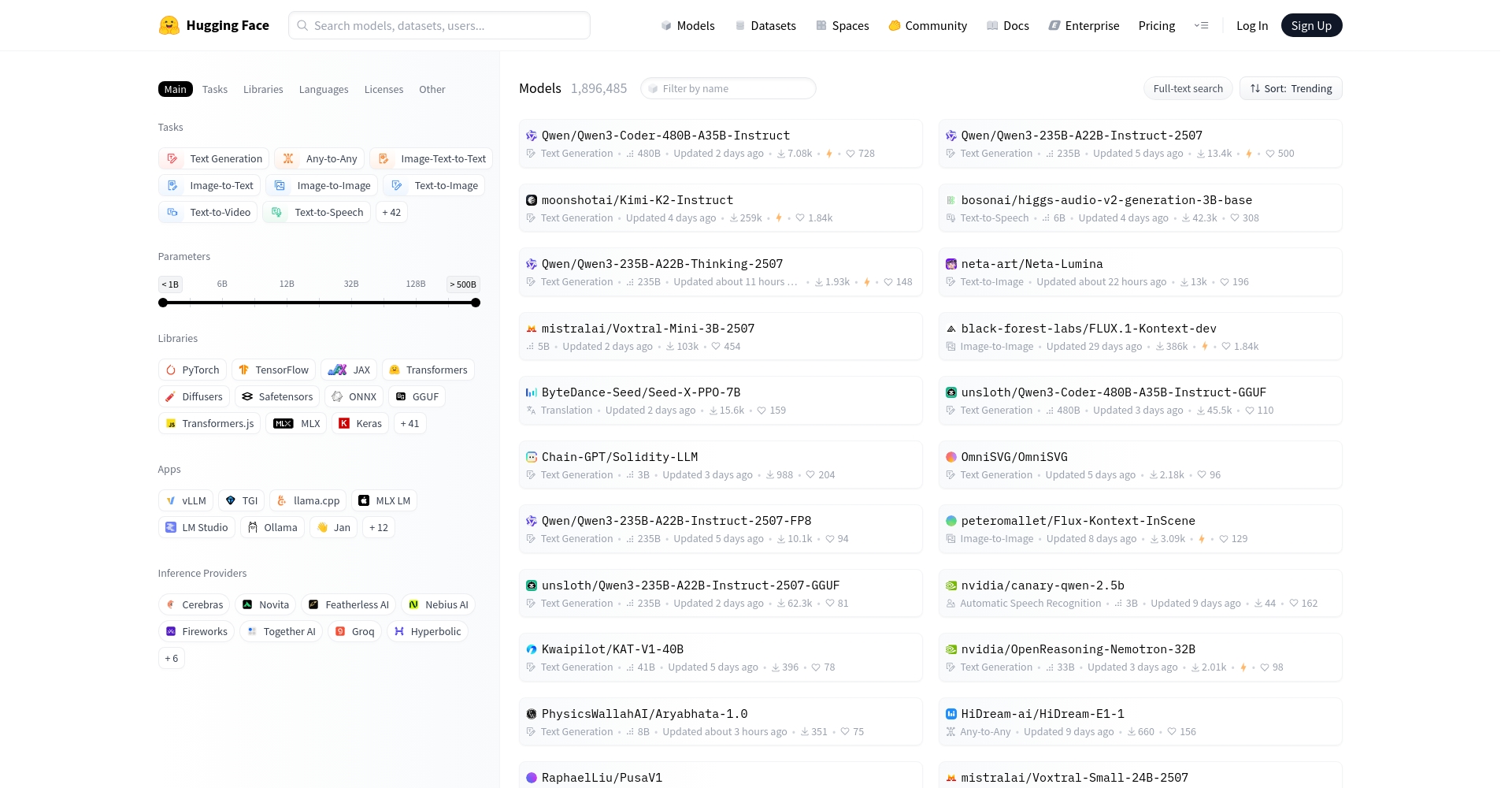
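Once you have a token, it is safer to keep it in an environment variable than in source code. The `huggingface_hub` library reads `HF_TOKEN` automatically, and the Hub's HTTP APIs accept the token as a Bearer credential. The minimal sketch below builds that header. The helper name `hf_auth_headers` is hypothetical.

```python
import os
from typing import Dict


def hf_auth_headers(env_var: str = "HF_TOKEN") -> Dict[str, str]:
    """Build the HTTP Authorization header for Hub API calls from an environment variable."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set {env_var} to an access token from your HuggingFace token settings page"
        )
    return {"Authorization": f"Bearer {token}"}
```

For example, `requests.get(url, headers=hf_auth_headers())` would send an authenticated request. Keeping the token out of code also keeps it out of version control.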

2. Finding and loading models/datasets

- Use the Hub homepage: search by keyword, or use the filters to narrow results by task, library, or author.

For Python users, a few lines of code are enough to download a pretrained model and run inference. For example, sentiment analysis with the `transformers` library:

```python
from transformers import pipeline

# Downloads the model from the Hub on first use (then caches it) and runs inference locally
classifier = pipeline("sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english")
result = classifier("HuggingFace is really powerful!")
print(result)  # a list containing one {'label': ..., 'score': ...} dict
```
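The `sentiment-analysis` pipeline returns a label and a score. For a single-label classifier, the score is the softmax probability of the highest-scoring class. The sketch below reproduces that post-processing step in plain Python. The logits are made up. The `ID2LABEL` mapping (0 = NEGATIVE, 1 = POSITIVE) is an assumption about the SST-2 model above; in practice it is read from the model's config.

```python
import math
from typing import Dict, List

# Assumed label mapping for the SST-2 sentiment model (normally taken from its config)
ID2LABEL: Dict[int, str] = {0: "NEGATIVE", 1: "POSITIVE"}


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def postprocess(logits: List[float], id2label: Dict[int, str] = ID2LABEL) -> Dict[str, object]:
    """Turn raw classifier logits into a {'label', 'score'} dict like the pipeline's."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": id2label[best], "score": probs[best]}


print(postprocess([-2.0, 2.0]))  # label 'POSITIVE' with a score of about 0.98
```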

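– Loading a dataset:

```python
from datasets import load_dataset

# Downloads the IMDB movie-review dataset and caches it locally
dataset = load_dataset("imdb")
print(dataset["train"][:2])  # the first two training examples
```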
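Slicing a `Dataset`, as in `dataset["train"][:2]`, returns a dict that maps each column name to a list of values, not a list of rows. If row-by-row records are more convenient, a small helper can transpose the batch. The helper name `columns_to_rows` is hypothetical.

```python
from typing import Any, Dict, List


def columns_to_rows(batch: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Convert a column-oriented batch ({'text': [...], 'label': [...]}) into row dicts."""
    lengths = {len(values) for values in batch.values()}
    if len(lengths) > 1:
        raise ValueError("all columns must have the same length")
    n = lengths.pop() if lengths else 0
    return [{name: values[i] for name, values in batch.items()} for i in range(n)]


# Toy batch shaped like a two-row slice of a text-classification dataset
print(columns_to_rows({"text": ["great film", "dull plot"], "label": [1, 0]}))
# [{'text': 'great film', 'label': 1}, {'text': 'dull plot', 'label': 0}]
```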

3. Download and Local Deployment

Models and datasets can be downloaded directly from the web page, from the command line (`huggingface-cli download`), with `git clone`, or in batches through the API/SDK.

For detailed instructions, see the Official Guide and the HF-Mirror acceleration guide.
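Sometimes only part of a repository is needed, such as Safetensors weights and JSON configs but not duplicate PyTorch `.bin` files. In that case, downloads can be filtered by glob pattern; `huggingface_hub.snapshot_download` accepts `allow_patterns` and `ignore_patterns` for this purpose. The sketch below shows the filtering logic on a hypothetical file list using Python's `fnmatch`. It does not download anything.

```python
from fnmatch import fnmatch
from typing import Iterable, List, Optional


def select_files(files: Iterable[str],
                 allow_patterns: Optional[List[str]] = None,
                 ignore_patterns: Optional[List[str]] = None) -> List[str]:
    """Keep files that match any allow pattern and no ignore pattern (shell-style globs)."""
    selected = []
    for f in files:
        if allow_patterns and not any(fnmatch(f, p) for p in allow_patterns):
            continue  # an allow list exists and this file is not on it
        if ignore_patterns and any(fnmatch(f, p) for p in ignore_patterns):
            continue  # explicitly excluded
        selected.append(f)
    return selected


# Hypothetical repository contents
files = ["config.json", "model.safetensors", "pytorch_model.bin", "tokenizer.json", "README.md"]
print(select_files(files, allow_patterns=["*.json", "*.safetensors"]))
# ['config.json', 'model.safetensors', 'tokenizer.json']
```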

4. Try Out Spaces Demos

No coding is required: open a Space and try various AI models directly in the browser.

Entrance: https://huggingface.co/spaces

5. Private Model/Enterprise Collaboration

Enterprises can build their own Private Hub, configure multi-level permissions and data isolation online, and collaborate efficiently.

Who is HuggingFace suitable for?

The HuggingFace platform is suitable for almost all user groups who are interested in AI innovation:

- Researchers: Easy model reproduction, academic exchange, and validation of cutting-edge techniques.

- AI algorithm engineers/developers: Streamlined model design, fine-tuning, online deployment, and version management.

- AI application companies/teams: Secure, efficient custom deployments and SaaS service capabilities.

- Faculty and students: Abundant learning resources, course certifications, and hands-on project guidance.

- Low-code/business users: Easy use of mature models for rapid prototyping or real product development.

| User type | Recommended modules | Typical use |
| --- | --- | --- |
| Research/Universities | Hub, Transformers, Datasets, etc. | Paper reproduction and rapid experimentation |
| Enterprises | Private Hub, API, Inference Endpoints | Data privacy and commercial deployment |
| Startups/Developers | Spaces, demo applications | Rapid application innovation |
| Beginners | Spaces, learning materials | Low-barrier entry to AI |

HuggingFace's profound impact on the artificial intelligence industry

HuggingFace has become core infrastructure for the open-source AI ecosystem. As of 2024, the platform hosted over 900,000 models and more than 200,000 datasets, with over 1 billion calls annually. It has helped democratize large models. For example, it coordinated the BigScience collaboration that produced BLOOM. It also serves as a primary distribution channel for openly released models such as Meta's Llama and Stable Diffusion.

Its advantages are prominent:

- By lowering the barrier to entry for AI models, it lets SMEs and individuals access top-tier AI capabilities on an equal footing.

- It offers a complete technology stack covering data, models, evaluation, and deployment, supporting sectors such as cloud computing and chips, algorithm research, and education and training.

- Its strong community and collaboration mechanisms (such as its in-depth collaboration with AWS and Meta in 2024) have fostered cross-industry co-creation and the formulation of AI ethics guidelines, supporting the sustainable development of AI.

Frequently Asked Questions

1. What kinds of AI models does HuggingFace support?

HuggingFace covers multiple modalities, including NLP, CV, audio, and generative AI. It supports models for language generation, machine translation, sentiment classification, question answering, image recognition, and text-to-image and audio generation. Virtual assistants, intelligent customer service, content moderation, and AIGC (AI-generated content) creation all benefit from the platform. Examples include the Llama series of large models, BERT, and Stable Diffusion.

2. Is data transmission and model hosting secure? What privacy protection mechanisms are in place?

HuggingFace uses industry-standard HTTPS encryption and version-based access control. It offers enterprise users private storage and data isolation through the Private Hub. API calls and model management support token-based permissions, access tracking, and customized protection for sensitive data. This makes the platform suitable for industries with strict security requirements, such as finance and healthcare.

3. Does the platform impose download/access restrictions on users in mainland China? How can I speed up the experience?

Some model and dataset repositories are gated. To use them, you must request access on the repository page on the HuggingFace website, then authenticate with your access token when downloading. Developers in mainland China can use the HF-Mirror (https://hf-mirror.com) to greatly speed up downloads of models and datasets. It supports convenient access from the command line and through the API without needing a VPN.

For details, see the HF-Mirror Tutorial.
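The mirror is usually enabled through the `HF_ENDPOINT` environment variable (in a shell: `export HF_ENDPOINT=https://hf-mirror.com`). `huggingface_hub` reads this variable when it is first imported. In Python, it therefore has to be set before importing `huggingface_hub`, `transformers`, or `datasets`. Below is a minimal sketch, assuming the mirror URL given above. The helper `current_endpoint` is hypothetical.

```python
import os

# Set this before importing huggingface_hub, transformers, or datasets:
# huggingface_hub reads HF_ENDPOINT once, when it is first imported.
# setdefault keeps any endpoint the user has already configured.
os.environ.setdefault("HF_ENDPOINT", "https://hf-mirror.com")


def current_endpoint() -> str:
    """Endpoint the Hub client libraries will use (falls back to the official Hub)."""
    return os.environ.get("HF_ENDPOINT", "https://huggingface.co").rstrip("/")


print(current_endpoint())
```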

HuggingFace has promoted the idea of "AI for everyone," enabling developers and enterprises worldwide to innovate on an equal footing and collaborate quickly. As open-source AI models continue to grow in number and diversity, HuggingFace is well positioned to keep leading the evolution of intelligent technologies and to support industrial digitalization and the wider adoption of AI in society. To experience, learn, and build further, visit the HuggingFace official website and start your own AI projects.


The HuggingFace entry on this site is provided by AI Miao Navigation. All external links originate from the internet, and their accuracy and completeness are not guaranteed. AI Miao Navigation has no actual control over the content of these external links. As of 12:17 PM on August 6, 2025, the content of this webpage was compliant and legal. If any content on the webpage becomes illegal in the future, contact the website administrator directly for removal. AI Miao Navigation assumes no responsibility.
