What is VL? In-depth analysis of the most noteworthy AI visual language models of 2025.

VL (Visual Language Model, also abbreviated VLM) is one of the hottest trends in artificial intelligence in 2025. It can simultaneously understand and generate multimodal data such as text, images, and video. Written from a news-reporting angle, this article explains VLMs in detail: their definition, development trajectory, mainstream products, core capabilities, industry application prospects, and future technical challenges. It also includes tables, rankings, and a list of practical tools to help you systematically understand the next generation of VLM technology and its practical value.

Basic definition and development of VL

What is VL? What are its core components?

A VL (Vision-Language Model) is a type of artificial intelligence model that can process multimodal information such as images (or video) and text at the same time. Its typical architecture includes a visual encoder and a language encoder. After their outputs are fused through multi-layer neural networks and aligned across modalities, the model gains capabilities such as interpreting images, generating images from text, and answering questions about images.
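To make "aligned across modalities" more concrete, here is a minimal sketch of one common way to train that alignment: a symmetric contrastive loss of the kind used by CLIP-style models. It is an illustration only, not the method of any specific product discussed here. The hand-written vectors stand in for encoder outputs, and the function names and the `temperature` value are assumptions chosen for the example.

```python
import math

def l2_normalize(vec):
    # Scale a non-zero vector to unit length.
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def cosine_similarity(a, b):
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss; image i is assumed to match text i."""
    # Similarity of every image to every text, sharpened by the temperature.
    logits = [
        [cosine_similarity(img, txt) / temperature for txt in text_embs]
        for img in image_embs
    ]

    def cross_entropy(rows):
        # Average of -log softmax(row)[i], using the log-sum-exp trick.
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)
            log_sum = m + math.log(sum(math.exp(v - m) for v in row))
            total += log_sum - row[i]
        return total / len(rows)

    # Image-to-text uses the rows, text-to-image uses the columns.
    columns = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy(logits) + cross_entropy(columns))

# Hypothetical encoder outputs: matching pairs point in the same direction.
aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
print(contrastive_loss(aligned, aligned))  # small: pairs already agree
print(contrastive_loss(aligned, swapped))  # large: pairs disagree
```

Minimizing this loss pulls matching image and text vectors together and pushes mismatched ones apart, which is what lets a single shared vector space serve both modalities.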

Keyword Explanation: VL Key Terms

| Term | Full name | Meaning |
|---|---|---|
| VLM | Vision-Language Model | A core representative of the multimodal capabilities of modern AI |
| Encoder | Encoder | Transforms images or text into vectors that the model can process |
| Multimodal AI | Multimodal artificial intelligence | AI capable of processing multiple types of information (such as images and text) simultaneously |

Milestones in the Development of Visual Language Models

The development of the VL model has gone through several stages:

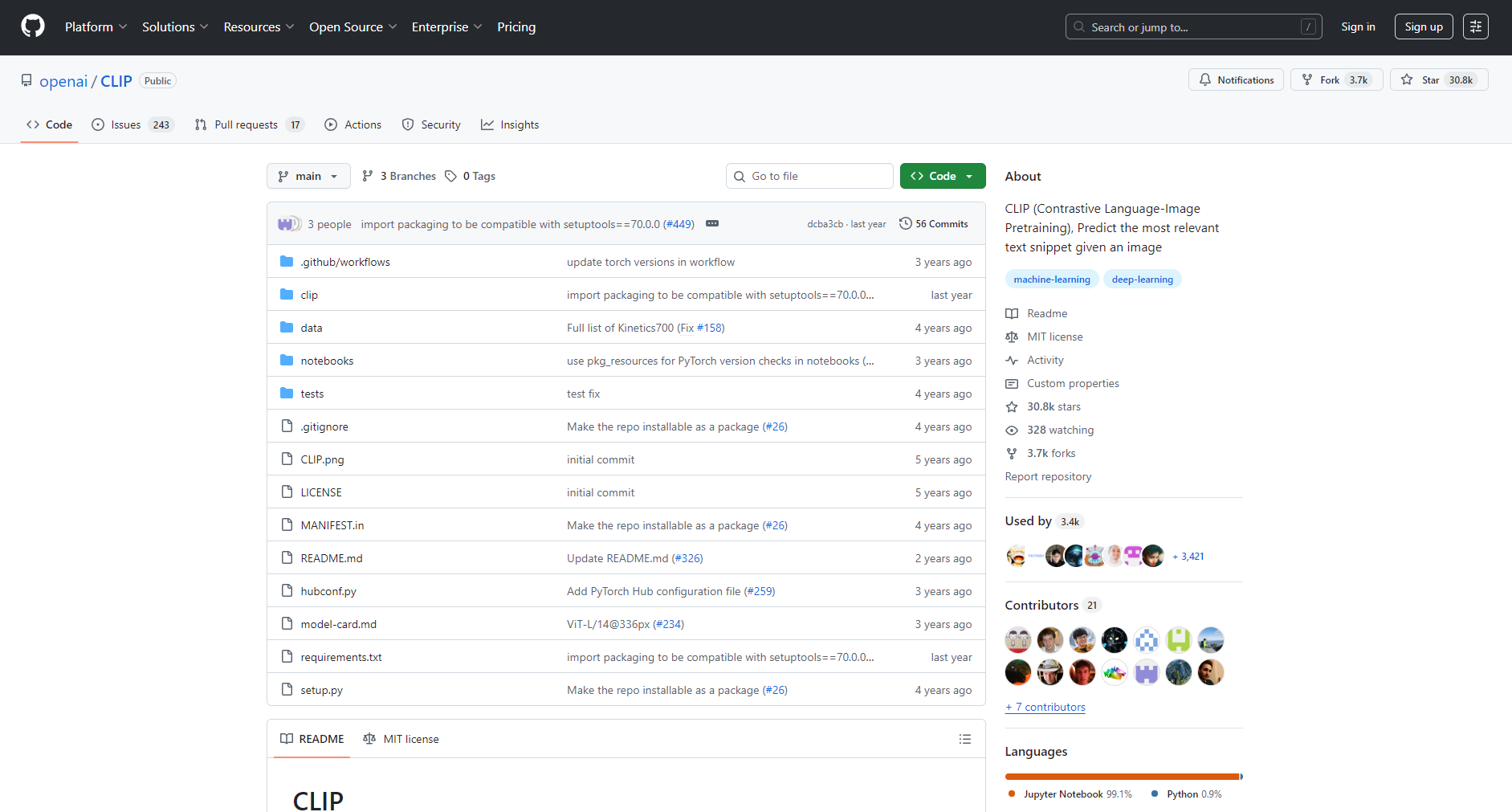

- 2021: OpenAI released the CLIP model, demonstrating large-scale joint training on text and images;

- 2022-2024: Generative models such as DALL-E and Stable Diffusion became popular worldwide;

- 2023-2024: OpenAI GPT-4V, Google Gemini 1.5 Pro, and several Chinese VL models were released;

- 2025: VL products, with larger model scale and stronger contextual understanding, are driving a new wave of industry adoption.

Representative VL Products and Their Features

Comparison Table of Representative Visual Language Models in 2025

| Product/Model | Developer | Supported data types | Key feature | Application areas | Trial/Experience entry |
|---|---|---|---|---|---|
| GPT-4o | OpenAI | Text/Image/Audio | All-modality focus with strong reasoning and generation | Smart assistant, office automation | ChatGPT-4o |
| Gemini 1.5 Pro | Google | Text/Image/Video/Audio | Long context, strong scientific and technological innovation capabilities | Education/Search/Content creation | Gemini |
| DeepSeek-VL | DeepSeek | Text/Image | Excellent performance on Chinese tasks | Chinese search/Office | DeepSeek-VL |
| Qwen-VL | Alibaba Cloud | Text/Image | Large-scale, open source, multilingual | Industry AI, automated question answering | Qwen-VL on HuggingFace |
| LLaVA | Community/Multiple parties | Text/Image | Integrates high-quality visual data from the community | Open source research/Application development | LLaVA project |
| Stable Diffusion | Stability AI | Text-to-image generation (VL fusion) | Customizable and locally deployable | Design/Creativity/Education | Stable Diffusion |

(Some of the above features may be slightly adjusted due to product version updates.)

List of core functions of the VL model

- Image content comprehension (image captioning): Automatically generates summaries of image content, describing the text, objects, and scenes in the image.

- Image-based question answering (VQA, Visual Question Answering): Automatically answers questions about image and video content.

- Cross-modal retrieval: Supports retrieval methods such as text-based image search, image-based text search, and video content indexing.

- Text-to-image and image-to-text generation: Generates high-quality visual content from text, and generates text from images.

- Mathematics, table, and flowchart recognition: Parses and interprets formulas, tables, and diagrams.

- Multilingual compatibility: Supports input and output in multiple languages, including Chinese and English.
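Cross-modal retrieval from the list above can be sketched as a nearest-neighbor search in a shared embedding space. The example below assumes that a text query and a set of images have already been encoded into vectors of the same dimension. The file names, the vectors, and the `search_images_by_text` helper are all hypothetical and serve only as an illustration.

```python
import math

def cosine(a, b):
    # Cosine similarity between two non-zero vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search_images_by_text(query_emb, image_index, top_k=2):
    """Return the names of the top_k images most similar to the query."""
    scored = [(cosine(query_emb, emb), name) for name, emb in image_index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Hypothetical precomputed image embeddings (a real system would get
# these from a visual encoder and store them in a vector index).
IMAGE_INDEX = {
    "cat_on_sofa.jpg": [0.9, 0.1, 0.0],
    "city_traffic.jpg": [0.1, 0.9, 0.2],
    "invoice_scan.png": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of the text query "a cat indoors".
query = [1.0, 0.0, 0.1]
print(search_images_by_text(query, IMAGE_INDEX))
```

Image-based text search works the same way with the roles reversed: the query is an image embedding and the index holds text embeddings.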

Recommended Key Tools

- Baidu Wenxin Yiyan - Multimodal Large Model

- iFlytek Spark - Multimodal AI

- OpenVLM Evaluation Platform: VL model performance ranking

VL Application Hotspots: Popular Industry Scenarios in 2025

Intelligent content creation and design

- Automatic image matching: News editors and content e-commerce businesses can use VL to generate visually consistent image materials from a single description.

- AI drawing and animation production: Supports customized production of AI-generated comics, animations, illustrations, and more.

Smart office and barrier-free interaction

- Document visual understanding and summarization: Automatically identifies and summarizes tables, invoices, PPT screenshots, and similar materials.

- AI assistants that "describe images": The AI narrates scenes and images to assist visually impaired users.

Scientific research innovation and professional vision field

- Intelligent analysis of medical images: VL models help doctors with a preliminary interpretation of images such as CT and MRI scans.

- Educational support: Solving exercises written on a blackboard, recognizing mathematical formulas, and so on.

Smart security and autonomous driving

- Multimodal monitoring: Text commands can be used to control cameras and trigger video recognition alarms.

- Understanding traffic scenarios from images: Describing complex traffic images in natural language makes autonomous driving systems more intelligent.

Industrial Challenges and Technological Frontiers of Visual Language Models

The main challenges of VL models

- Data privacy and model hallucination: Inappropriate training data can easily lead to AI hallucinations, and sensitive information must be strictly controlled.

- Generalizing reasoning across multiple scenarios: Breakthroughs are needed in few-shot learning, adaptability to new scenarios, and the ability to understand and reason in complex multimodal situations.

- Computing power and deployment cost pressures: Inference with ultra-large VL models is resource-intensive. In 2025, local lightweight inference combined with hybrid routing to large models is becoming a direction for exploration.
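The hybrid routing idea in the last item can be sketched as a simple rule: send cheap requests to a small local model and escalate the rest to a large hosted model. The `Request` fields, the thresholds, and the `"local"` / `"large"` labels below are assumptions made for illustration. A production router would more likely rely on measured latency, cost, and quality signals.

```python
from dataclasses import dataclass

@dataclass
class Request:
    prompt: str
    num_images: int
    needs_reasoning: bool = False  # hypothetical flag set by the caller

def route(request, max_local_images=1, max_local_prompt_chars=500):
    """Pick a model tier for a request using simple, illustrative rules."""
    if request.needs_reasoning:
        return "large"  # multi-step reasoning goes to the large model
    if request.num_images > max_local_images:
        return "large"  # many images exceed the local budget
    if len(request.prompt) > max_local_prompt_chars:
        return "large"  # long prompts need a longer context window
    return "local"      # everything else stays on the lightweight model

print(route(Request("Describe this photo.", num_images=1)))
print(route(Request("Compare these three charts.", num_images=3)))
```

Keeping the rule in one small function makes the thresholds easy to tune as local models improve.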

Industry Frontier Report Excerpt

Recent arXiv papers and OpenVLM benchmark results suggest that VL models are improving in mathematical reasoning and understanding of complex scenarios, and that the gap in certain aspects is gradually narrowing. Challenges remain, however, in factual consistency and in the ability to process large volumes of general-purpose data.

Latest benchmark evaluation and ranking of VL products in 2025

| Benchmark | What it evaluates | Applicable VL models |
|---|---|---|
| MathVista | Mathematical reasoning over images and tables | Gemini, GPT-4o |
| MMBench | OCR and spatial relationships | Qwen-VL, LLaVA |
| VQA, GQA | Image-based question answering and reasoning | DeepSeek-VL, GPT-4o |
| OCRBench | Document recognition | Gemini, Qwen |

Recommended open-source benchmarking tools: VLMEvalKit, LMMs-Eval
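As a rough illustration of what a question-answering benchmark score means, the sketch below computes exact-match accuracy after light answer normalization. This is a simplified stand-in, not the scoring used by VLMEvalKit, LMMs-Eval, or any benchmark in the table, each of which defines its own metrics. The function names and sample answers are hypothetical.

```python
import string

def normalize(answer):
    # Lowercase, drop ASCII punctuation, and collapse whitespace.
    answer = answer.lower().strip()
    answer = answer.translate(str.maketrans("", "", string.punctuation))
    return " ".join(answer.split())

def exact_match_accuracy(predictions, references):
    """Fraction of predictions that equal the reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    correct = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return correct / len(references)

# Hypothetical model outputs and ground-truth answers for three questions.
predictions = ["Two cats.", "red", "a bus"]
references = ["two cats", "Blue", "A bus"]
print(exact_match_accuracy(predictions, references))
```

Exact match is strict by design, so scores from this sketch are not comparable with published leaderboard numbers.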

Conclusion

VL, the Visual Language Model, is an indispensable new pillar of AI development in 2025. It enables one-stop understanding, analysis, and creation of multimodal data such as images, text, audio, and video, driving change in content creation, office automation, scientific research, medical diagnosis, barrier-free communication, and autonomous driving.

With continued breakthroughs in foundation models, VL is set to become one of the most central and imaginative directions in AI. Enterprises and developers should closely follow new VL tools, seize industry opportunities, and embrace the new digital era brought about by the fusion of machine vision and natural language understanding.

© Copyright notes

The copyright of the article belongs to the author, please do not reprint without permission.
