A Comprehensive Analysis of Lamini: A Pioneer in Enterprise-Grade AI Training Model Platforms

Amid the AI revolution, more companies are looking to build customized large language models (LLMs) with a low barrier to entry. Lamini is an enterprise-level platform that has emerged from this trend, providing a one-stop solution from model training and tuning to inference services. This article, written in a news-report style, analyzes Lamini's core value, technical highlights, and application potential for enterprises and developers.


(Official website: https://lamini.ai/)

Lamini Platform Introduction

Lamini is an AI technology company founded in California, USA, in 2022. Its core product, the Lamini platform, provides enterprises with training, optimization, and deployment services for open large language models (LLMs), with an emphasis on making enterprise-grade model training as simple as possible. Lamini runs on-premises, in private clouds, and in public clouds, and supports both NVIDIA and AMD GPU hardware, helping enterprises adopt AI faster. More information is available on the official website.

Lamini's founding team has a strong academic and industry background: its research covers LLM scaling laws and large-scale AI iteration in production environments, and its members bring experience from industry leaders such as Amazon, Google, and OpenAI. The platform is open to any enterprise with AI needs, not only to machine learning PhDs or dedicated AI teams, which lowers the barrier to entry.

Lamini's main functions are based on the principles of "low code," "high compatibility," and "high controllability," aiming to help enterprises flexibly own their own AI training models. Its main functional highlights are as follows:

(For detailed development documentation and official demos, see the Lamini Docs.)

1. Rapid AI training model customization

Lamini supports fine-tuning techniques such as LoRA. Through its proprietary API and Python SDK, a few lines of code are enough to run high-quality fine-tuning locally or in the cloud, without ML experts. It also offers optimization paths such as RLHF (Reinforcement Learning from Human Feedback) and prompt engineering to improve the accuracy of model output.
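To make the LoRA idea concrete, here is a minimal, framework-free sketch. It is not Lamini's implementation, and the function names and layer sizes are illustrative assumptions. LoRA freezes a weight matrix W of shape d x k and trains only a low-rank update B·A of rank r, so the number of trainable parameters drops from d·k to r·(d + k).

```python
"""Minimal LoRA sketch in pure Python (illustrative only, not Lamini's code)."""
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_param_counts(d, k, r):
    """Return (full fine-tuning params, LoRA trainable params) for a d x k layer."""
    return d * k, r * (d + k)


def lora_effective_weight(w, b, a, alpha, r):
    """Compute W + (alpha / r) * B @ A without modifying the frozen W."""
    delta = matmul(b, a)
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Hypothetical layer sizes, chosen only to show the arithmetic.
full, lora = lora_param_counts(d=4096, k=4096, r=8)
print(f"full: {full:,} params, LoRA: {lora:,} params ({full // lora}x fewer)")

# Tiny numeric example: B starts at zero, so the adapted weight equals W.
d, k, r = 3, 4, 2
w = [[random.random() for _ in range(k)] for _ in range(d)]
b = [[0.0] * r for _ in range(d)]                                   # d x r, zero-initialised
a = [[random.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k
assert lora_effective_weight(w, b, a, alpha=16, r=r) == w
```

With these example sizes the adapter trains 65,536 parameters instead of 16,777,216, which is why LoRA-style fine-tuning fits on far smaller hardware budgets than full fine-tuning.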

2. Data Generation and Expansion

It features a powerful built-in generator that can automatically expand small batches of data into massive training sets, supporting practical scenarios such as question-and-answer, summarization, and structured information generation. Data validation and automatic cleaning tools significantly reduce the burden of pre-processing data for enterprises.
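The expand-then-clean flow described above can be sketched in a few lines. This is a toy stand-in, not Lamini's generator: the fixed paraphrase templates replace what would be an LLM-based generator, and the `input`/`output` field names and the 500-character limit are assumptions made for illustration.

```python
"""Toy data-expansion pipeline: expand seed Q&A pairs, then validate and dedupe."""

# Hypothetical paraphrase templates standing in for an LLM-based generator.
TEMPLATES = [
    "{q}",
    "Could you tell me: {q}",
    "I have a question. {q}",
]


def expand(seeds):
    """Yield one candidate record per (seed, template) combination."""
    for seed in seeds:
        for template in TEMPLATES:
            yield {"input": template.format(q=seed["question"]),
                   "output": seed["answer"]}


def is_valid(record, max_chars=500):
    """Reject records with empty or overly long fields."""
    return all(0 < len(record[key].strip()) <= max_chars
               for key in ("input", "output"))


def clean(records):
    """Drop invalid records and case-insensitive duplicate inputs, keeping order."""
    seen = set()
    cleaned = []
    for record in records:
        key = record["input"].strip().lower()
        if is_valid(record) and key not in seen:
            seen.add(key)
            cleaned.append(record)
    return cleaned


seeds = [
    {"question": "What is the refund window?", "answer": "30 days from delivery."},
    {"question": "What is the refund window?", "answer": "30 days from delivery."},  # duplicate
    {"question": "Is there a warranty?", "answer": ""},  # invalid: empty answer
]
dataset = clean(expand(seeds))
print(len(dataset), "training records")
```

Keeping validation and deduplication as a separate pass means the same checks can be reused whatever generator produces the candidates.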

3. High-speed inference and batch inference

This high-throughput inference API is ideal for large-scale text and document batch inference and real-time response scenarios. Structured JSON output facilitates deep integration with enterprise systems and significantly reduces application error rates.
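One reason structured JSON output lowers application error rates is that the calling system can check every reply before using it. The sketch below shows that check on the consumer side using only the standard library. The schema and field names are hypothetical and not taken from Lamini's API.

```python
"""Validate a model's JSON reply against an expected field/type schema."""
import json

# Hypothetical schema: field name -> required Python type.
SCHEMA = {"invoice_id": str, "total": float, "paid": bool}


def parse_structured(raw, schema):
    """Parse raw JSON text and check that every schema field has the right type."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected in schema.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        value = data[field]
        # bool is a subclass of int in Python, so reject it for numeric fields.
        if expected is float:
            ok = isinstance(value, (int, float)) and not isinstance(value, bool)
        else:
            ok = isinstance(value, expected)
        if not ok:
            raise ValueError(f"field {field!r} should be {expected.__name__}")
    return {field: data[field] for field in schema}


reply = '{"invoice_id": "INV-7", "total": 129.5, "paid": true}'
print(parse_structured(reply, SCHEMA))
```

A reply that fails validation can be retried or routed to a fallback instead of propagating a malformed record into downstream systems.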

4. Flexible deployment in multiple environments

Flexible deployment across on-premises, public clouds (such as AWS, GCP), private clouds, and air-gapped networks. Supports automatic orchestration of GPU clusters, easily handling millions of concurrent training and inference requests.

5. Data and security compliance

Enterprise-grade data isolation and security ensure that proprietary data is never leaked, providing a strong foundation for customers with strong compliance requirements. Management tools allow for customization of logs, permissions, call frequency, and model version backtracking.

Key Features Overview

| Category | Description | Technical features |
|---|---|---|
| Model training and optimization | LLM customization and fine-tuning on proprietary data | Supports LoRA, RLHF, etc. |
| Data generation and expansion | Generating large datasets from small samples | Prompt engineering / automatic cleaning |
| Inference and integration | Fast API batch inference plus structured output | High throughput / JSON results |
| Deployment and compatibility | Local, cloud, and GPU auto-scaling | Supports NVIDIA and AMD / multi-cloud environments |
| Security and management | Full access control, data isolation, and audit traceability | Enterprise-grade encryption / compliance support |

For detailed API and business scenario examples, refer to the Lamini Official Documentation.

Lamini's Pricing & Solutions

Lamini offers flexible pricing and service plans tailored to different types of businesses. Its main pricing models are summarized below:

| Plan | Target users | Services included | Price |
|---|---|---|---|
| Free plan | Developers / small-scale experiments | 200 inferences/month plus limited optimization attempts | $0 |
| Enterprise plan | Medium to large teams / enterprises | Unlimited inference and optimization, private model weight download, expert support | Custom pricing (contact sales) |

Free registration includes $20 in trial inference credits, which lets beginners try out enterprise-level features. The enterprise plan places no limit on model type or capacity, allows throughput and latency requirements to be fully customized, and permits direct download of fine-tuned model weights. Credit card payment and corporate billing terms are supported. Detailed settlement methods and pricing are subject to the latest announcements on the official website.

Special Note: For customers requiring high-frequency batch model training and large-scale inference, Lamini offers in-depth enterprise-level cooperation options such as GPU reservation, dedicated bandwidth, and customized security hardening. Contact the Lamini team for a customized quote.

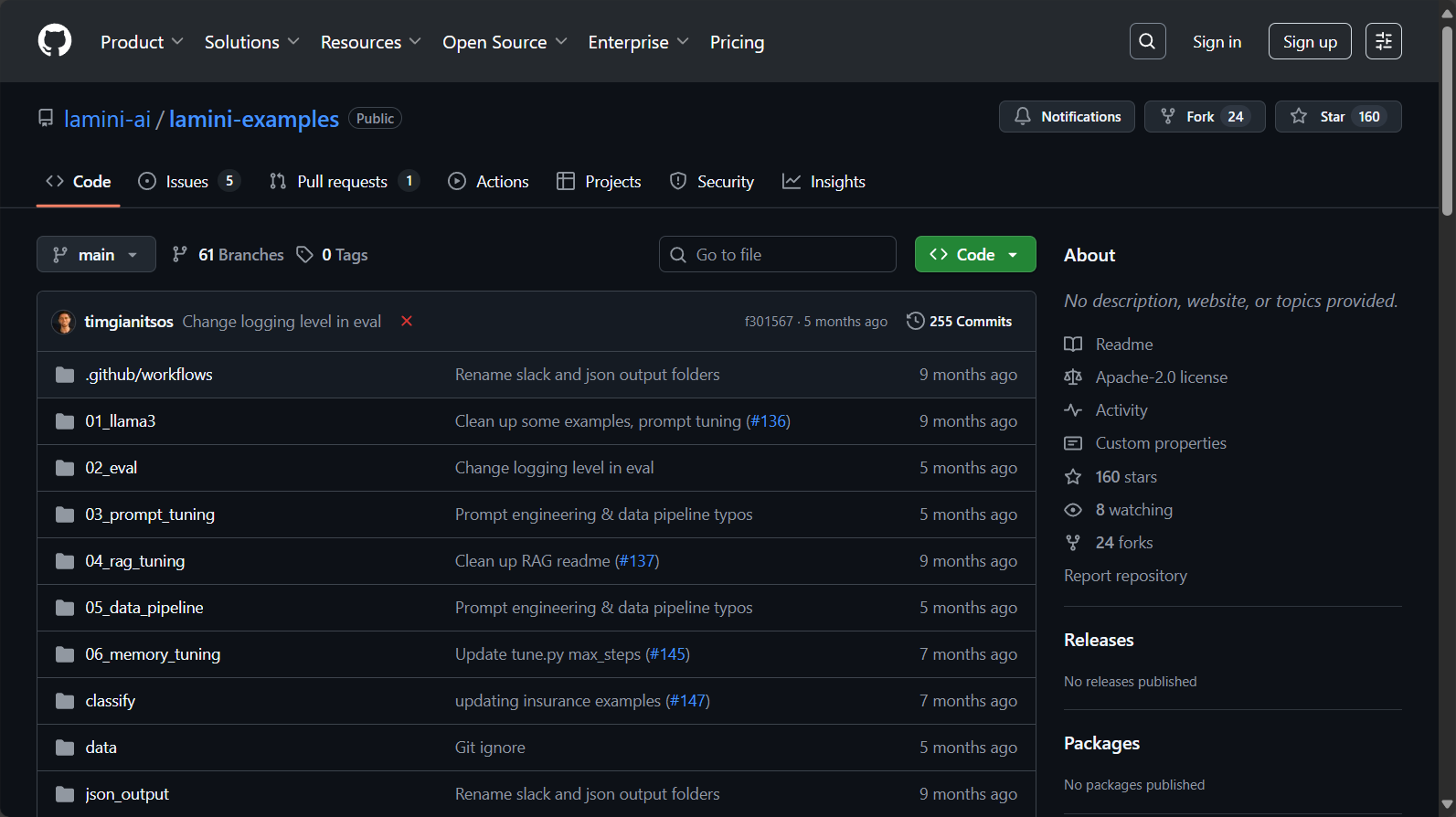

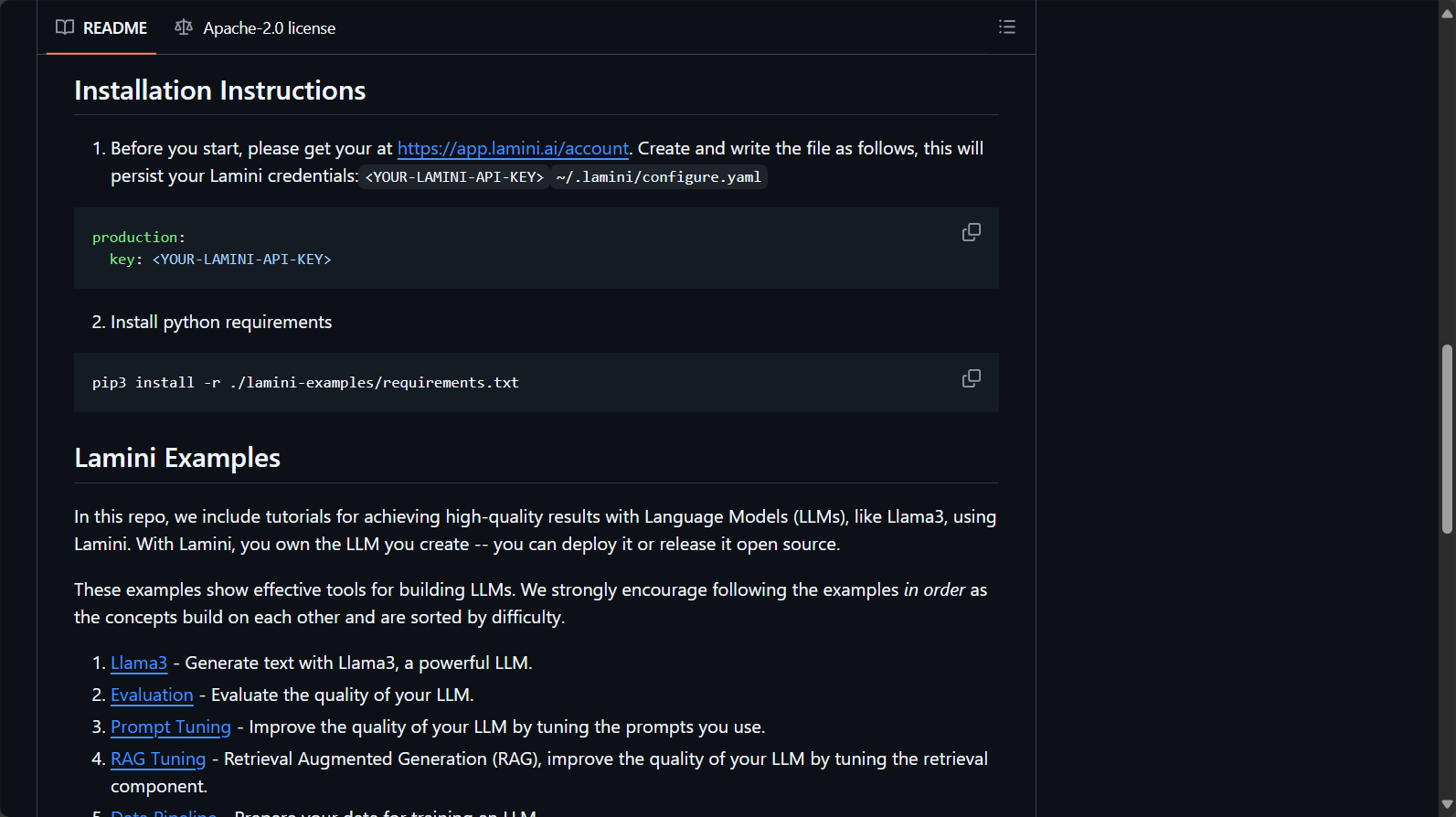

How to use Lamini

1. Account registration and API application

Register an account and apply for API access; Lamini can also be tried online via Hugging Face. The platform supports Python SDK and RESTful API integration, so both engineers building small tools and enterprise IT teams can integrate it quickly.

2. Basic Model Customization Process

Upload or configure enterprise-owned data (such as knowledge bases, contracts, and FAQs). Expand the training samples with the platform's automatic data generator. Select or upload a base LLM (the platform is compatible with mainstream open-source and self-developed models). Then call the API or use the Python SDK:

    from lamini import train_llm

    model = train_llm(task='qa', data=your_data, …)

Once fine-tuned, the model can be immediately deployed for inference, or the weights can be exported for local deployment.
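The first step of this process, getting enterprise data into a trainable shape, can be sketched with the standard library alone. The example below writes FAQ pairs as JSONL and holds out an evaluation split so the fine-tuned model can be checked afterwards. The `input`/`output` field names, the file names, and the 20% split are assumptions for illustration; the format the platform actually expects should be taken from the official documentation.

```python
"""Prepare FAQ data as JSONL with a held-out evaluation split (illustrative)."""
import json
import random
import tempfile
from pathlib import Path


def split_records(records, eval_fraction=0.2, seed=0):
    """Shuffle deterministically and return (train, eval) lists."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]


def write_jsonl(records, path):
    """Write one JSON object per line, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read a JSONL file back into a list of dicts."""
    with open(path, encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]


# Hypothetical FAQ entries; the "input"/"output" field names are an assumption.
faq = [{"input": f"Question {i}?", "output": f"Answer {i}."} for i in range(10)]
train, held_out = split_records(faq)

out_dir = Path(tempfile.mkdtemp())
write_jsonl(train, out_dir / "train.jsonl")
write_jsonl(held_out, out_dir / "eval.jsonl")
print(len(train), "train /", len(held_out), "eval records in", out_dir)
```

Fixing the shuffle seed keeps the split reproducible, so later fine-tuning runs are evaluated on the same held-out questions.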

3. Integration and secondary development

Lamini can serve as an internal AI platform, integrating internal business data to provide low-code extensions for scenarios such as knowledge-based Q&A, document generation, and intelligent customer service. It supports high-concurrency asynchronous batch requests and features a detailed team collaboration and version management mechanism. If you encounter any problems during use, you can ask questions through the developer documentation community or contact official technical support.
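On the client side, high-concurrency asynchronous batch requests usually mean sending many requests at once while capping how many are in flight. The sketch below shows that pattern with `asyncio`. The `fake_infer` coroutine is a hypothetical stand-in for a real network call to an inference endpoint, and the concurrency limit of 4 is arbitrary.

```python
"""Bounded-concurrency batch inference with asyncio (stubbed model call)."""
import asyncio


async def fake_infer(prompt):
    """Stand-in for a network call to an inference endpoint (hypothetical)."""
    await asyncio.sleep(0.01)  # simulate latency
    return f"answer to: {prompt}"


async def run_batch(prompts, infer, max_concurrency=4):
    """Run infer() over all prompts, at most max_concurrency at a time.

    Results come back in the same order as the prompts.
    """
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(prompt):
        async with semaphore:
            return await infer(prompt)

    return await asyncio.gather(*(guarded(p) for p in prompts))


prompts = [f"Summarize document {i}" for i in range(10)]
results = asyncio.run(run_batch(prompts, fake_infer))
print(results[0])
```

The semaphore keeps the client from overwhelming the service or its own rate limits, while `asyncio.gather` preserves input order so results can be matched back to their documents.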

Lamini's target audience

| Target audience | Reasons and application scenarios |
|---|---|
| Enterprises / large and medium-sized institutions | Need customized AI that can handle massive amounts of proprietary documents while ensuring high data security |
| AI startups | Need to quickly build petabyte-scale models and agilely deploy new features |
| IT / digital teams | Internal knowledge base dialogue assistants, intelligent operation and maintenance, enterprise process optimization, etc. |
| AI education and research institutions | Validating new model theories and generating teaching or research paradigms |
| AI outsourcing service providers | Rapid delivery of LLMs in multi-user, multi-business scenarios |

Typical industry cases

Finance: automatic report generation, anti-fraud and risk-control text analysis, intelligent investment advisory. Healthcare: medical case interpretation, document summarization, automatic generation of popular science content. Retail/E-commerce: product copywriting, customer service bots, customer sentiment analysis. Manufacturing: process documentation, knowledge base maintenance and dialogue, compliance material collection.

Why has Lamini become a leading AI training model platform?

The deep integration of technological innovation and industrial application is Lamini's greatest strength.

Customized high precision: Memory Tuning, LoRA optimization, and Experts-in-the-Loop improvements raise task accuracy on enterprise data.

Inference resource utilization: a reported 32x higher GPU utilization on equivalent hardware, plus support for AMD GPUs as an alternative to NVIDIA-only deployments.

Comprehensive deployment capabilities: local, offline, and hybrid cloud deployment options keep data under the enterprise's control and support IT security and compliance.

Developer-friendly: developer-centric APIs, a simple SDK, and comprehensive documentation lower the barrier to AI implementation.

Comparison of one-stop capabilities with common open-source self-training frameworks and cloud APIs:

| Dimension | Lamini | Hugging Face | OpenAI platform | Fine-tuning difficulty | Compatibility | Inference throughput | Enterprise specificity | Full data control |
|---|---|---|---|---|---|---|---|---|
| ☆☆☆☆☆ | Easy | Higher | API only | Low | High | Extremely high | Extremely strong | Excellent |

For a deeper look, see the Lamini technical documentation.

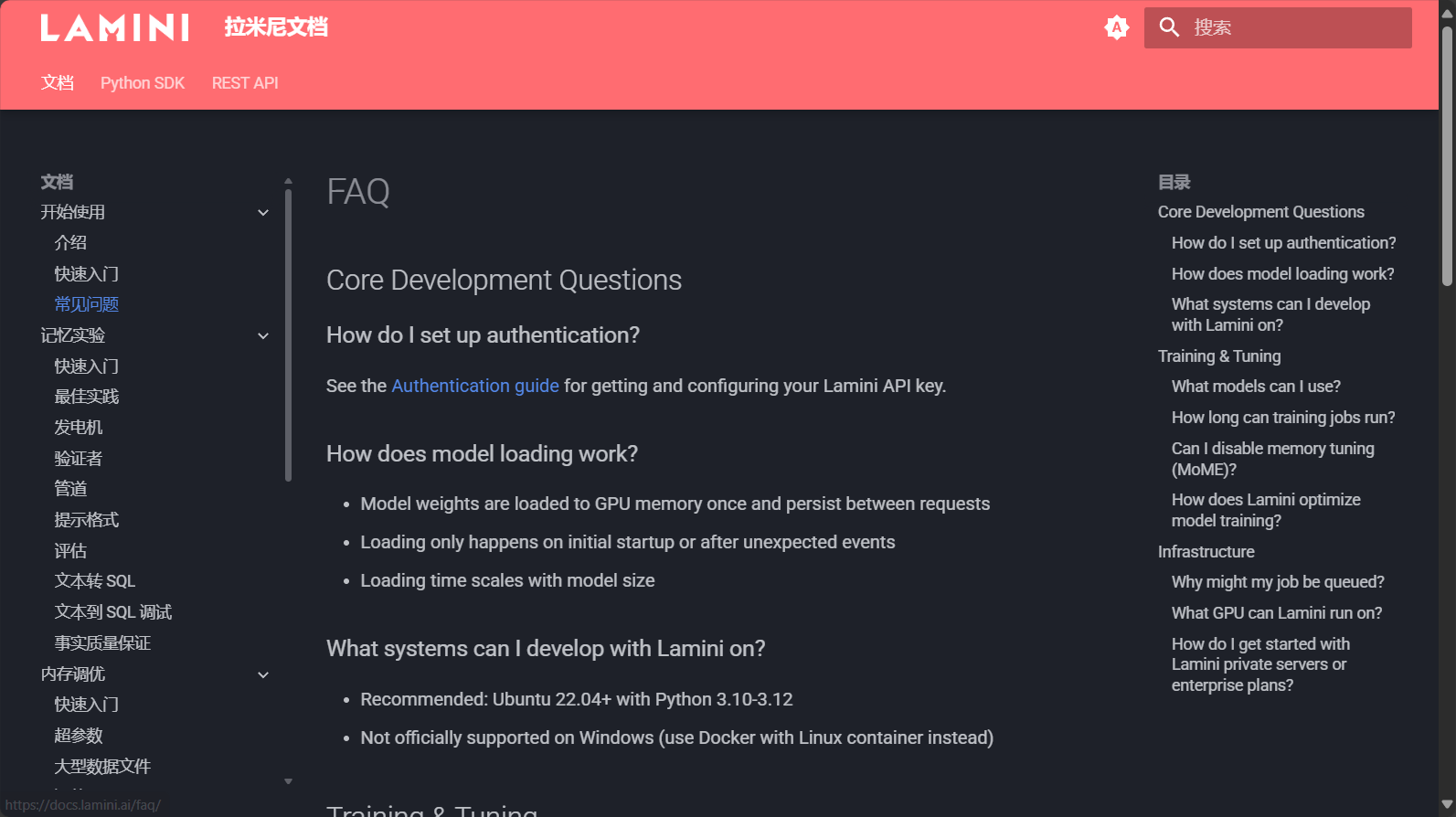

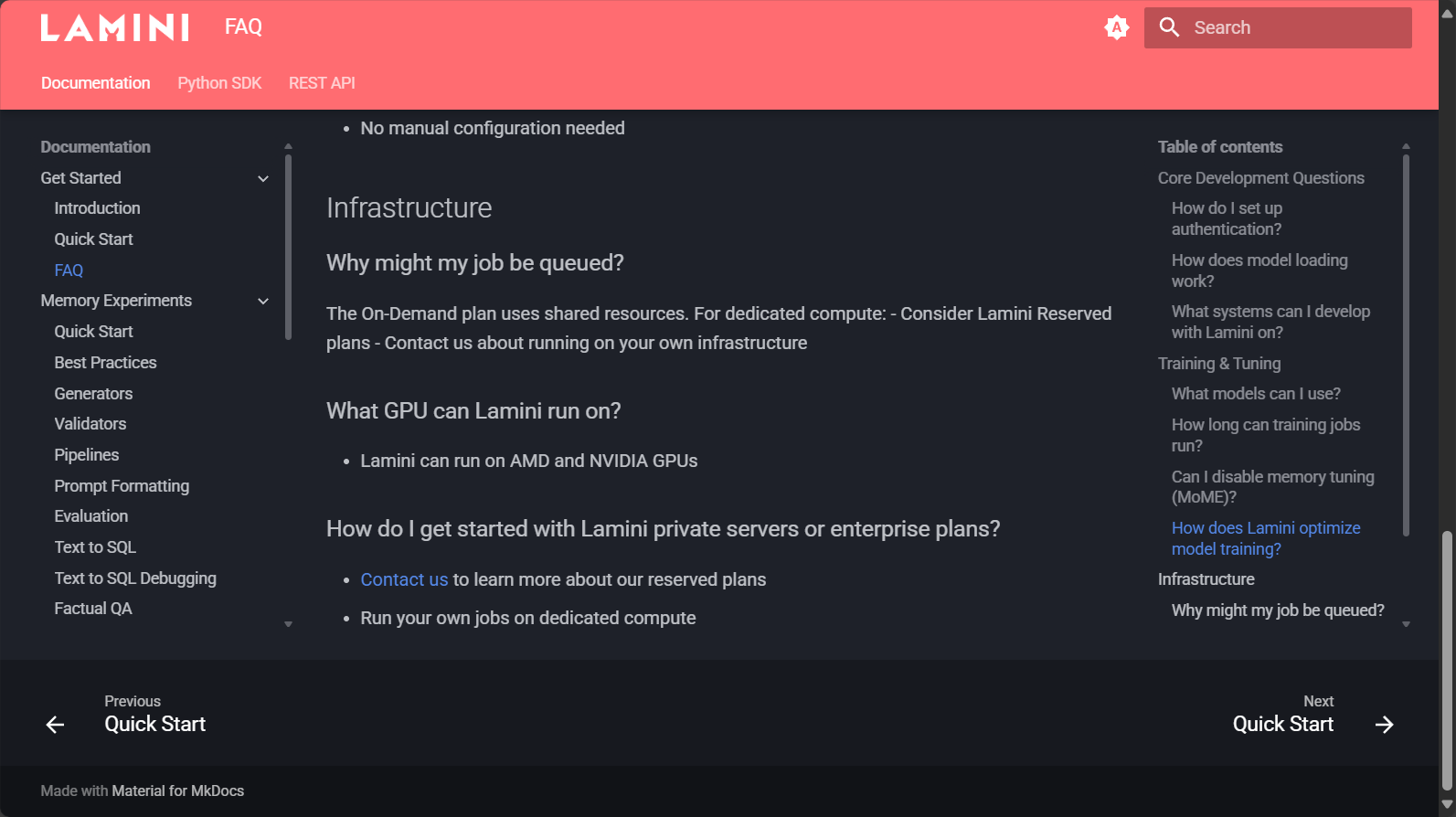

Frequently Asked Questions

1. Which AI models does Lamini support for training and fine-tuning?

Lamini is model-agnostic, supporting training, tuning, and inference for mainstream open-source LLMs (such as Llama and Mistral) as well as base models developed in-house by enterprises. Models can be switched freely, so there is no restriction to platform-developed models. For more details, see the list of supported models on the official website.

2. How are Lamini deployment and data security guaranteed?

The platform natively supports local private deployment and isolated network environments, so all enterprise data and model parameters remain within the enterprise's own infrastructure. It also provides data encryption, access control, and security auditing mechanisms, making it suitable for industries that are highly sensitive to data privacy and compliance, such as finance and healthcare. See the "Self-Hosted" deployment guide for details.

3. What are the core differences between Lamini and open-source self-trained or pure API cloud models?

Lamini advocates "low-barrier, customizable models," enabling teams without AI specialists to quickly obtain their own models, download the weights, and manage and upgrade them independently. It is not a "disposable API"; it emphasizes the security of enterprise digital assets and the sustainable growth of AI capabilities. Lamini also integrates the entire process, including fine-tuning, data generation, inference interfaces, access control, and monitoring, which significantly reduces IT integration work. Compared with research tools that can train models but are hard to deploy, it is easier to put into production, and it avoids the risks of relying entirely on third-party cloud AI.

In conclusion, Lamini is driving AI training model technology towards an era of enterprise-level autonomy. It not only solves the pain points of data security, performance, and rapid deployment for large enterprises, but also significantly narrows the technological gap between emerging teams and AI giants. For Chinese companies aiming to dominate the AI business, embracing a controllable and self-evolving AI training model platform like Lamini is undoubtedly one of the most forward-thinking strategic choices.

Want to experience the intelligent power of Lamini? Visit the official website now and usher in a new era of customized enterprise AI models!

The Lamini entry on this site, AI Miao Navigation, is sourced from the internet, and the accuracy and completeness of external links are not guaranteed. AI Miao Navigation has no actual control over the content of these external links. As of 12:02 PM on July 26, 2025, the content on this webpage was compliant and legal. If any content on the webpage later becomes illegal, please contact the website administrator directly for deletion. AI Miao Navigation assumes no responsibility.
