What is RLHF? A key technology that cannot be ignored in AI training in 2025.

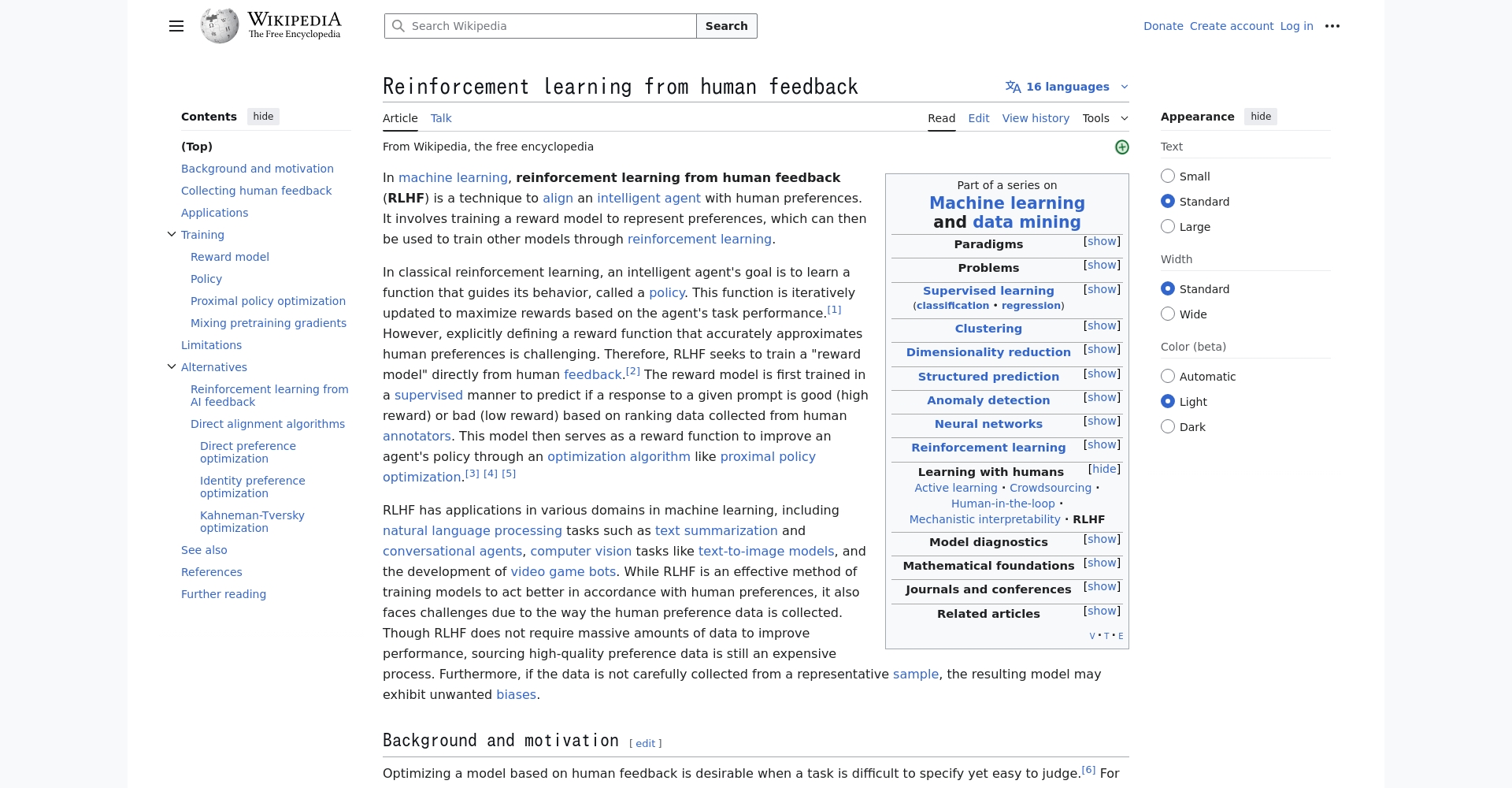

Reinforcement learning from human feedback (RLHF) has become an indispensable core technology in 2025 for training large models and upgrading their capabilities. This article gives a comprehensive overview of RLHF. It covers the basic principles, how RLHF differs from traditional RL, the key training process, and mainstream tools. It then examines technical challenges such as data bottlenecks, reward model bias, and computing power barriers. Finally, it follows the latest breakthroughs of 2025, including HybridFlow parallel training, the COBRA consensus mechanism, and personalized RLHF. Looking ahead, RLHF is driving AI toward greater safety, controllability, and alignment with diverse values. It is an essential path for AI to evolve and truly "understand you."

RLHF Basics and Technical Principles

Definition and core process of RLHF

RLHF (Reinforcement Learning from Human Feedback) combines human evaluation with reinforcement learning algorithms so that an AI system's decisions closely match human expectations. A typical pipeline has three stages: pre-training, reward model training, and reinforcement learning optimization. This pipeline is a key driving force behind deploying generative large models such as ChatGPT and Gemini.
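The reinforcement learning stage is commonly formalized, for example in InstructGPT-style pipelines, as maximizing a learned reward while penalizing drift from a reference model. The notation below is ours, not the article's. Here r_φ is the reward model, π_θ is the policy being optimized, and π_ref is the frozen model from the earlier stage. D is the prompt distribution, and β is a penalty weight.

```latex
\max_{\theta}\;
\mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi_\theta(\cdot \mid x)}
\big[\, r_\phi(x, y) \,\big]
\;-\;
\beta\,
\mathbb{E}_{x \sim \mathcal{D}}
\Big[ \mathrm{KL}\big( \pi_\theta(\cdot \mid x) \,\big\|\, \pi_{\mathrm{ref}}(\cdot \mid x) \big) \Big]
```

The first term rewards outputs that the reward model scores highly. The KL term keeps the policy close to π_ref so that it cannot drift arbitrarily far in pursuit of reward.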

Differences between RLHF and traditional reinforcement learning

| Dimension | Traditional reinforcement learning (RL) | RLHF |
|---|---|---|
| Reward signal | Defined by the environment and computed automatically | Derived from human ratings or preferences |
| Objective | Maximize the environment's reward | Maximize subjective human preference |
| Alignment capability | Struggles to capture complex human needs | Better aligned with human values |
| Vulnerability | Reward hacking is a serious problem | Human oversight can reduce the risk |

RLHF compensates for traditional RL's difficulty in capturing complex human preferences. This helps AI align more closely with what people actually intend.

Applications of RLHF in AI Systems and Large Model Training

Typical training process and application platforms

- Human annotation and data collection: gather high-quality model outputs scored by human annotators.

- Reward model construction: train a reward network on rankings and pairwise comparisons.

- RL optimization: use PPO, DPO, or similar methods to steer the model toward human preferences.
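The reward-model and optimization steps above can be illustrated with two pairwise preference losses. This is a minimal pure-Python sketch. It assumes scalar reward scores and precomputed sequence log-probabilities, whereas real trainers compute these over batches in a deep-learning framework.

- `reward_model_loss` is the Bradley-Terry objective commonly used to train reward networks from pairwise comparisons.
- `dpo_loss` is the Direct Preference Optimization loss, which optimizes the policy directly on preference pairs without a separate reward model.

PPO is not shown. The function names and the default `beta=0.1` are illustrative choices, not taken from the article.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry pairwise loss: -log sigmoid(r_chosen - r_rejected).

    r_chosen / r_rejected are the scalar scores the reward model assigns to
    the human-preferred and the dispreferred response for the same prompt.
    """
    return -log_sigmoid(r_chosen - r_rejected)


def dpo_loss(
    logp_chosen: float,
    logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    policy being trained (logp_*) or the frozen reference model (ref_logp_*).
    """
    chosen_margin = logp_chosen - ref_logp_chosen
    rejected_margin = logp_rejected - ref_logp_rejected
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


if __name__ == "__main__":
    # Equal scores give log(2); a wider preferred-minus-rejected margin lowers the loss.
    print(round(reward_model_loss(0.0, 0.0), 3))  # 0.693
    print(reward_model_loss(2.0, 0.0) < reward_model_loss(0.5, 0.0))  # True
    # The policy shifted probability toward the chosen answer relative to the reference.
    print(dpo_loss(-10.0, -14.0, -12.0, -12.0) < math.log(2))  # True
```

Both losses are minimized when the preferred response scores, or becomes relatively more likely, well above the rejected one.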

Mainstream RLHF platforms include:

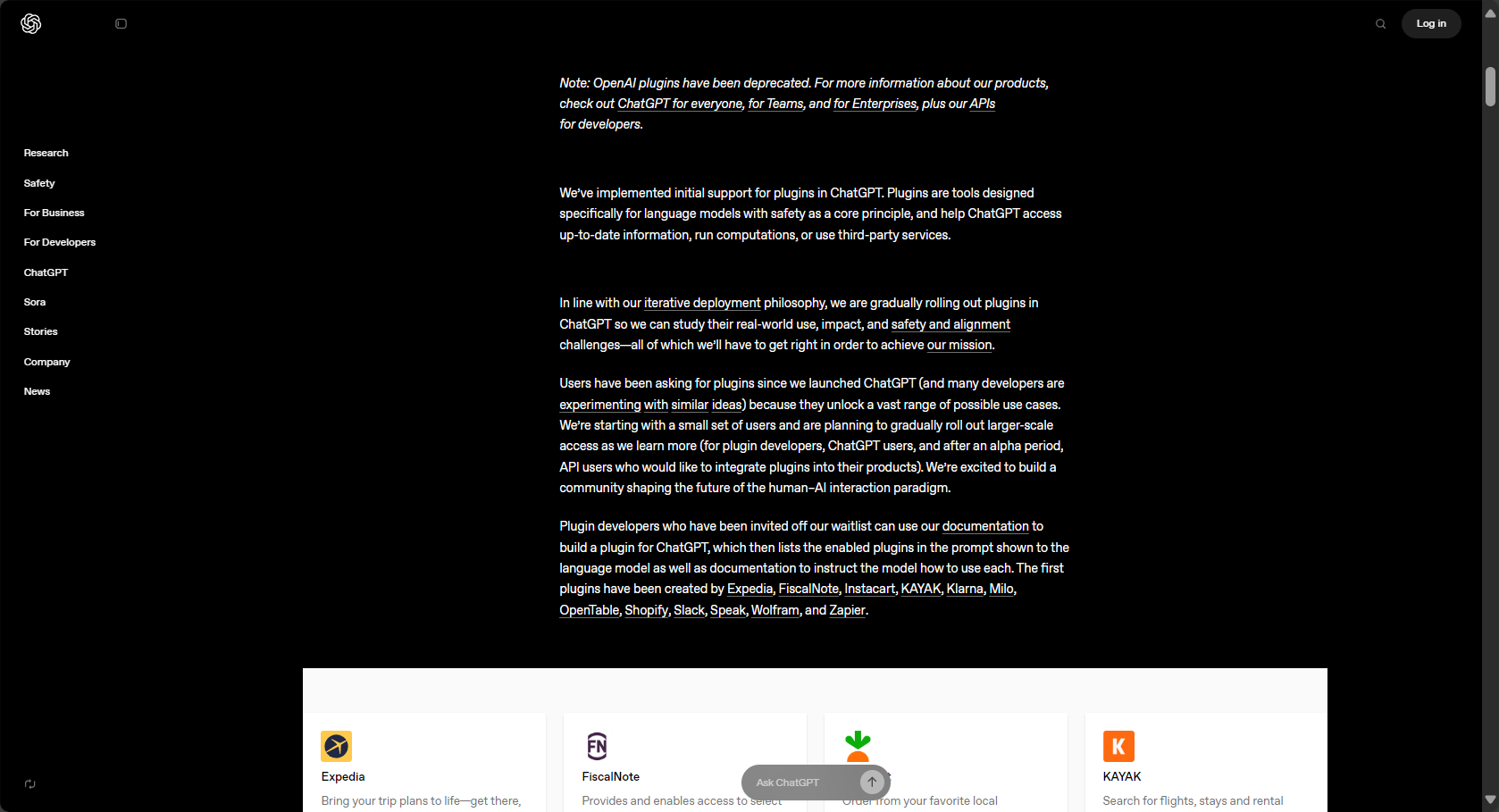

- OpenAI ChatGPT(Strongly aligned landing)

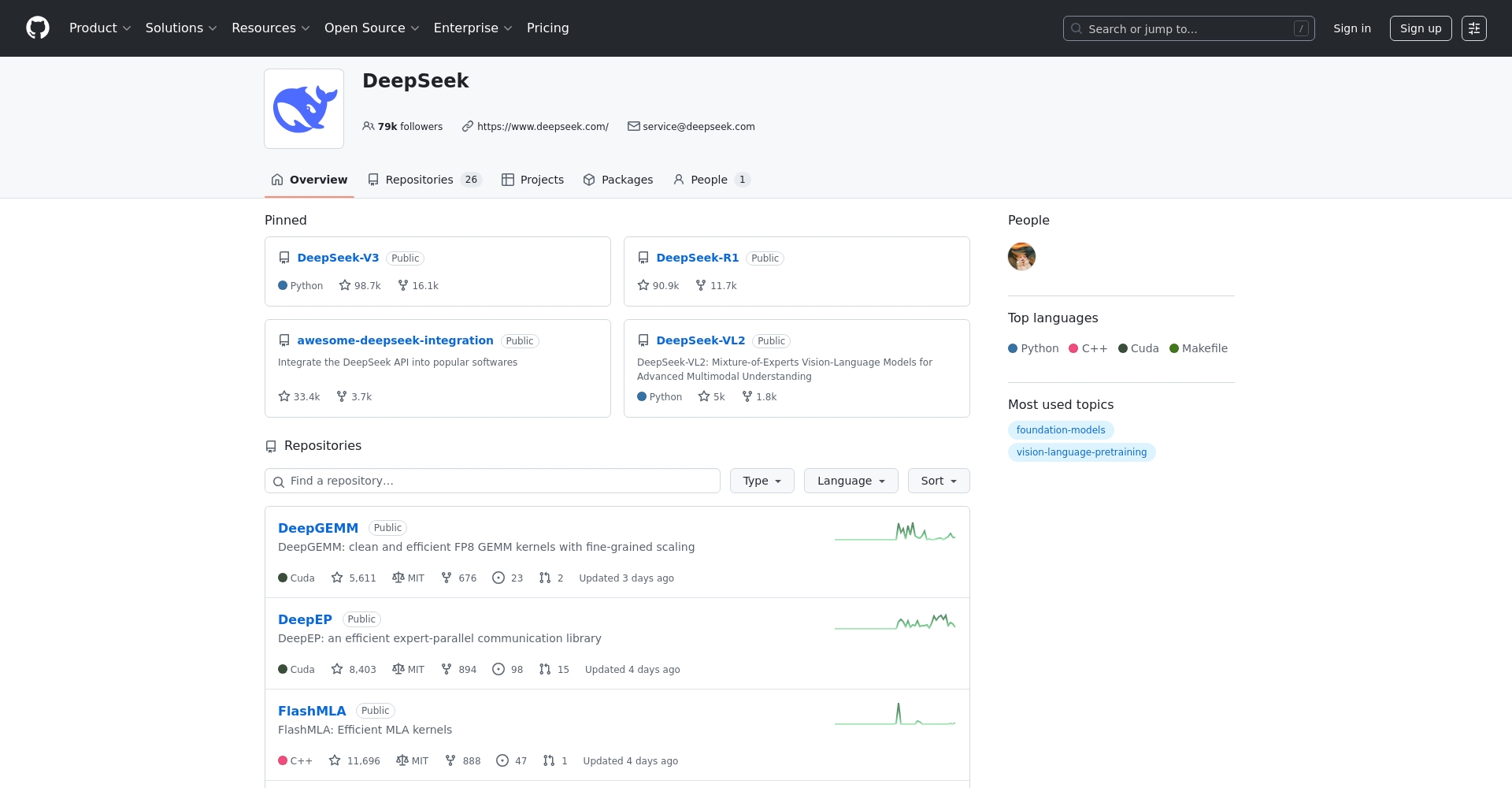

- DeepSeek(Cold Start and Efficient Training)

- Perle.ai(Automated annotation)

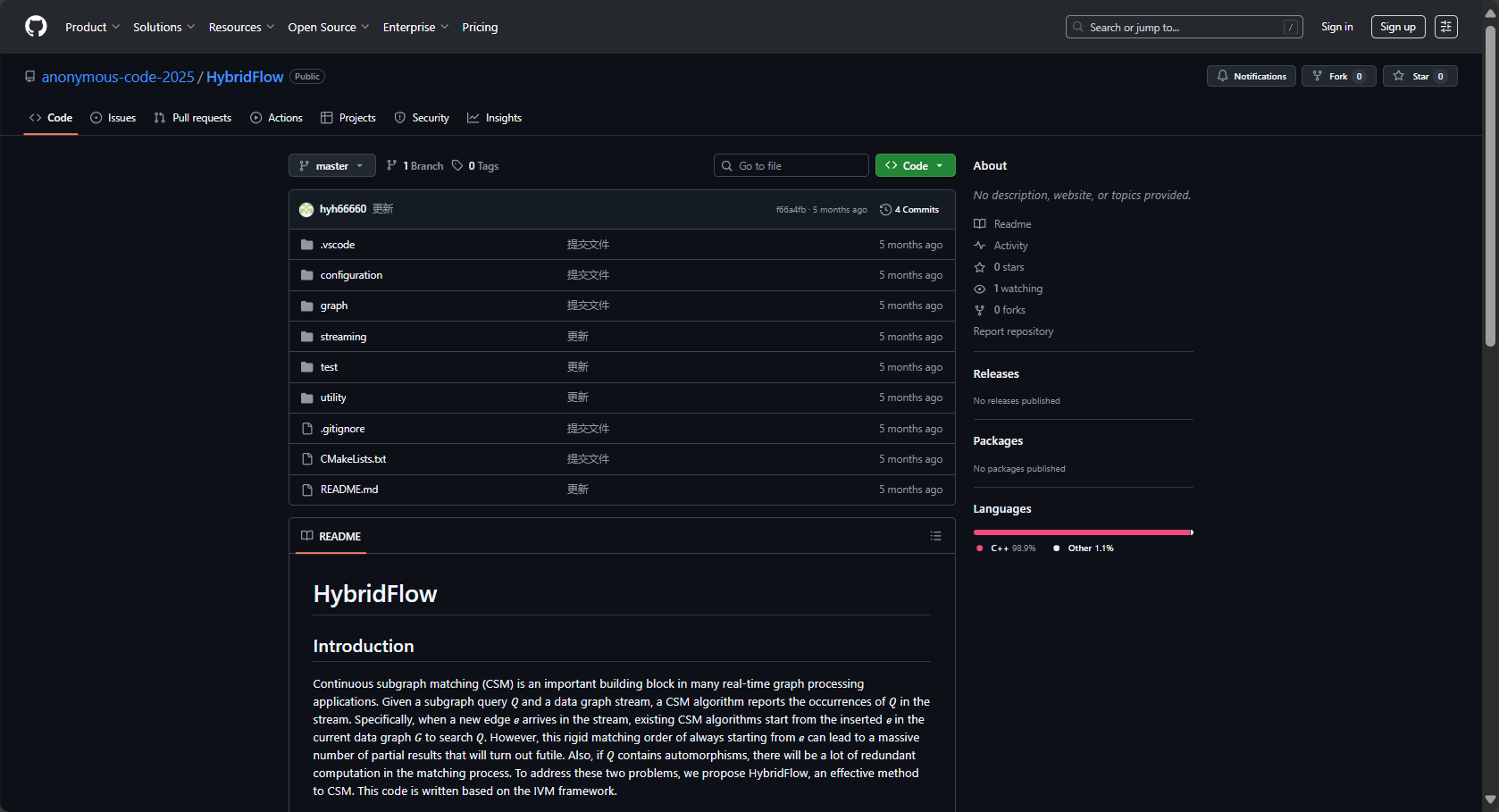

- HybridFlow(Massively parallel training)

Technical Challenge Analysis

- Scarcity of high-quality labeled data: labeling is labor-intensive, highly subjective, and prone to bias.

- Reward hacking and degradation of basic skills: optimization drifts away from what people actually expect.

- Massive compute and long training times: the barrier to entry for startup teams is high.

See the CSDN Frontier Column for details.
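One widely used guard against reward hacking, not described in this article, penalizes the policy for drifting away from a frozen reference model. The sketch below assumes per-token log-probabilities of the sampled response are already available and follows a common PPO-style setup:

- Each token is charged -beta * (log pi - log pi_ref).
- The reward-model score is added at the final token.

Summed over the sampled tokens, these log-ratios form a single-sample estimate of the KL divergence. The function name `kl_shaped_rewards` and the default `beta=0.05` are hypothetical.

```python
from __future__ import annotations


def kl_shaped_rewards(
    reward: float,
    policy_logprobs: list[float],
    ref_logprobs: list[float],
    beta: float = 0.05,
) -> list[float]:
    """Per-token rewards with a KL penalty toward a frozen reference model.

    Each token is charged beta * (log pi - log pi_ref) for the sampled token;
    the scalar reward-model score is added on the final token only.
    """
    if not policy_logprobs or len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("need equal-length, non-empty log-prob lists")
    shaped = [
        -beta * (lp - ref_lp)
        for lp, ref_lp in zip(policy_logprobs, ref_logprobs)
    ]
    shaped[-1] += reward
    return shaped


if __name__ == "__main__":
    # Same reward-model score (1.0), but the second policy has drifted far
    # from the reference, so its total shaped reward is lower.
    close = kl_shaped_rewards(1.0, [-1.0, -1.0], [-1.1, -1.0])
    drifted = kl_shaped_rewards(1.0, [-0.1, -0.1], [-3.0, -3.0])
    print(sum(close) > sum(drifted))  # True
```

A larger `beta` keeps the policy closer to the reference at the cost of slower reward gains.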

Key technical breakthroughs in RLHF in 2025

| Research direction | Key methods | Reported effect |
|---|---|---|
| Reward model optimization | Contrastive training, preference loss | Faster training convergence and better results |
| Highly parallel training frameworks | HybridFlow, pipeline decoupling | Throughput increased 1.5 to 20 times |
| COBRA consensus mechanism | Dynamic aggregation that filters out anomalous feedback | Reward accuracy improved by 30 to 40% |
| Segmented rewards | Segmentation + normalization | Noticeably faster and smoother optimization |
| Personalized training | Shared LoRA (low-rank adaptation) | Strong personalization in vertical domains |
| Synthetic data + expert annotation | Automated tools + manual spot checks | Data fidelity improved by 60% |
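The table does not spell out how COBRA's dynamic aggregation works. As a hedged stand-in, the sketch below shows the general idea of filtering anomalous feedback before aggregating it. It uses a plain median/MAD (median absolute deviation) outlier rule. This is a swapped-in textbook robust-statistics technique, not COBRA itself. `robust_aggregate` and its `threshold` parameter are hypothetical.

```python
from __future__ import annotations

from statistics import median


def robust_aggregate(scores: list[float], threshold: float = 3.0) -> float:
    """Average the scores that survive a median/MAD outlier filter.

    A score is dropped when its distance from the median exceeds `threshold`
    times the median absolute deviation. Generic robust statistics, NOT the
    published COBRA algorithm.
    """
    if not scores:
        raise ValueError("scores must be non-empty")
    center = median(scores)
    mad = median(abs(s - center) for s in scores)
    if mad == 0:
        # At least half the raters agree exactly; keep only the agreeing scores.
        kept = [s for s in scores if s == center]
    else:
        kept = [s for s in scores if abs(s - center) <= threshold * mad]
    return sum(kept) / len(kept)


if __name__ == "__main__":
    # Four consistent raters and one adversarial rating of -5.0.
    ratings = [4.0, 4.5, 4.2, 4.4, -5.0]
    print(sum(ratings) / len(ratings))  # plain mean, pulled down by the outlier
    print(robust_aggregate(ratings))    # roughly 4.275, outlier discarded
```

The median and MAD are used instead of the mean and standard deviation because a single extreme rating cannot shift them much.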

Detailed Explanation of Improvement Directions

- High-variance reward model training: leads to faster policy optimization and more robust algorithms.

- HybridFlow: fine-grained pipeline parallelism greatly improves training efficiency.

- COBRA consensus: prevents malicious or anomalous feedback from contaminating the reward signal.

- Segmented rewards and normalization: continuously refine and improve the generated text.

- Shared LoRA: adapts to individual user preferences and improves performance in few-sample scenarios.

- Synthetic data + expert annotation: significantly alleviates the data bottleneck.
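The article does not specify how responses are segmented or how rewards are normalized. The following is a minimal sketch of the general idea under stated assumptions:

- A response is split into sentence-like segments with a regex heuristic.
- Each segment is scored by a caller-supplied `score_segment` function, a hypothetical placeholder for a segment-level reward model.
- Scores are z-normalized using statistics pooled over the whole batch.

Pooling over the batch, rather than within one response, keeps the signal that one response is better overall. All function names here are illustrative.

```python
from __future__ import annotations

import re
from statistics import mean, pstdev
from typing import Callable


def segment_text(text: str) -> list[str]:
    """Split a response into sentence-like segments (a simple heuristic)."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def normalized_segment_rewards(
    responses: list[str],
    score_segment: Callable[[str], float],
    eps: float = 1e-8,
) -> list[list[float]]:
    """Score every segment, then z-normalize with batch-pooled statistics."""
    raw = [[score_segment(seg) for seg in segment_text(r)] for r in responses]
    pooled = [s for per_response in raw for s in per_response]
    if not pooled:
        return [[] for _ in responses]
    mu, sigma = mean(pooled), pstdev(pooled)
    # eps avoids division by zero when every segment gets the same score.
    return [[(s - mu) / (sigma + eps) for s in per_response] for per_response in raw]


if __name__ == "__main__":
    # Toy scorer (hypothetical): longer segments score higher.
    toy_scorer = lambda seg: float(len(seg.split()))
    batch = ["Good answer. Clear steps!", "Short."]
    print(normalized_segment_rewards(batch, toy_scorer))
    # Both segments of the first response come out positive, "Short." negative.
```

Normalized rewards keep a consistent scale across batches, which tends to make policy updates smoother.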

RLHF technology integration and development trends in 2025

| Area | Technical focus | Representative examples |
|---|---|---|
| Data labeling | Semi-automated labeling, diverse annotator teams | Perle.ai, synthetic data |
| Reward optimization | Multi-task comparison and policy improvement | COBRA, HybridFlow |
| Training efficiency | Pipelining, parallelism, cold start | DeepSeek, RLHFuse |
| Evaluation | Preference proxy evaluation | Stanford PPE |
| Personalization | Shared LoRA | Customized services in healthcare, finance, law, etc. |
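The article does not describe how shared LoRA splits parameters between users. The sketch below therefore shows only the basic low-rank adaptation (LoRA) update it builds on:

- The frozen weight W is left untouched.
- A trainable product B·A of rank r is added, scaled by alpha / r.

Pure Python lists stand in for tensors, and the function names are illustrative. Only A and B are trained, so an adapter for a d_out x d_in layer has r * (d_in + d_out) parameters instead of d_out * d_in. This is what makes per-domain or per-user adapters cheap.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Dense matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """h = W x + (alpha / r) * B (A x).

    W: frozen base weight, shape (d_out, d_in)
    A: trainable down-projection, shape (r, d_in)
    B: trainable up-projection, shape (d_out, r)
    """
    r = len(A)  # LoRA rank
    base = matvec(W, x)              # frozen pre-trained path
    delta = matvec(B, matvec(A, x))  # trainable low-rank path
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 weight
    A = [[1.0, 1.0]]              # rank r = 1
    B = [[0.5], [0.0]]
    x = [2.0, 3.0]
    print(lora_forward(W, A, B, x, alpha=1.0))  # [4.5, 3.0]
```

In the original LoRA formulation, B is initialized to zero. Training therefore starts from exactly the base model's behavior.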

Industry Applications and Future Prospects

- Open-source communities are driving RLHF adoption (DeepSeek, RLHFuse, etc.)

- Breakthroughs in academic research (Princeton, HKU's HybridFlow training, etc.)

- Industrial-scale deployment: OpenAI, Google, and ByteDance are building sophisticated RLHF supply chains.

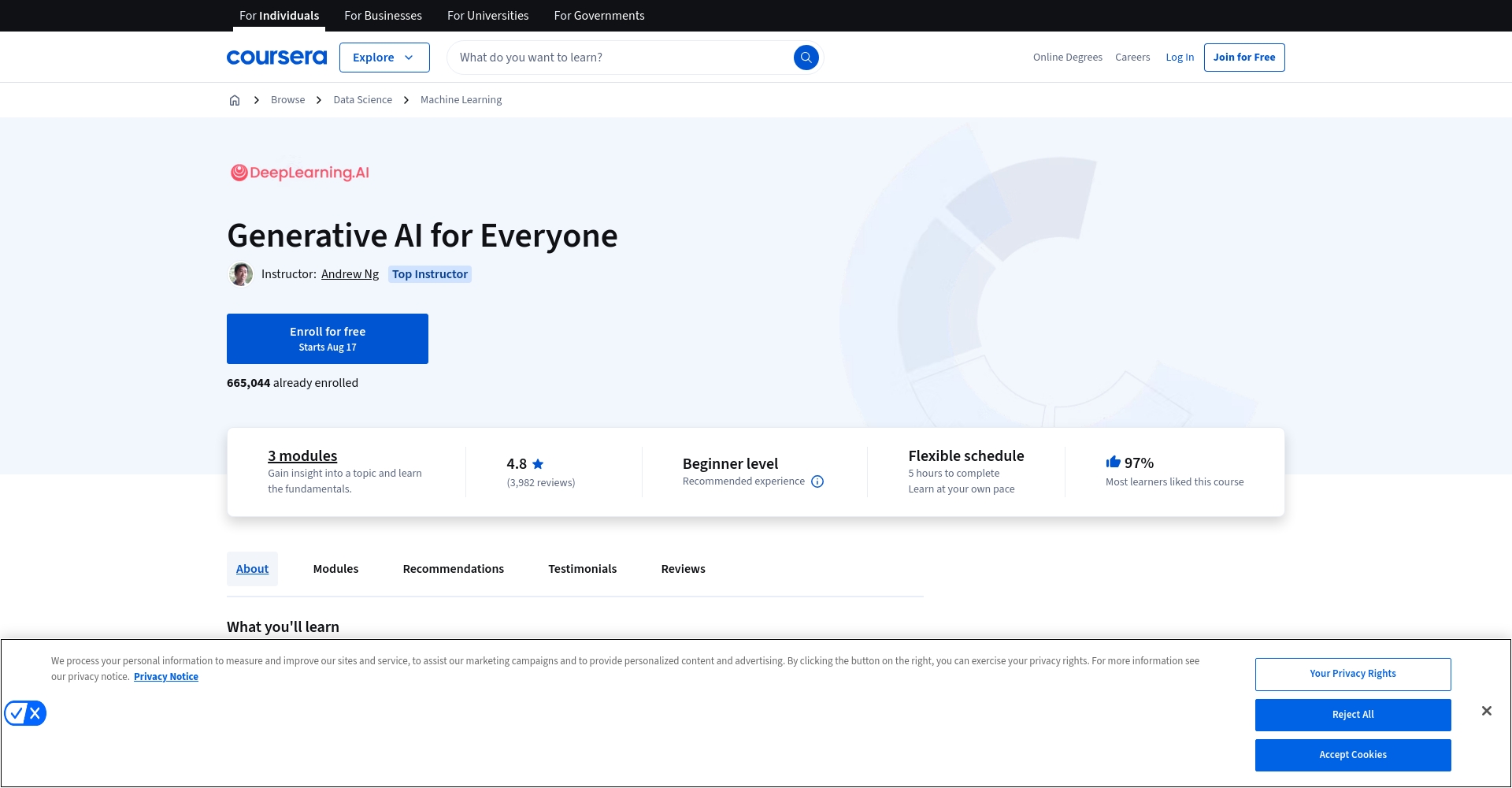

Hot topics in 2025 include multimodal RLHF (vision, audio) and federated, privacy-preserving RLHF. Together they reflect the diverse values AI must balance, including ethics, safety, and personalization. RLHF has become an indispensable engine in the training pipeline. To keep up with the new wave of AI, we recommend Coursera's RLHF courses and well-known open-source projects.

© Copyright notice

The copyright of this article belongs to the author. Please do not reprint without permission.
